Blog

- EEG Can Be Used For Cyber Security

- By Jason von Stietz, M.A.

- October 29, 2016


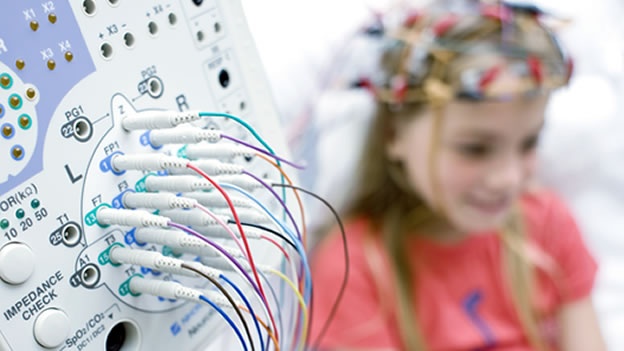

Photo Credit: Getty Images

Fingerprint scans are widely used to prove identity and can even be used to access a secured cyber system. However, fingerprint scans can be stolen or replicated. So, what is the next step in cyber security? Researchers are currently investigating how EEG can be used as a means of biometric authentication. The research was discussed in a recent article in Neuroscience News:

Cyber security and authentication have been under attack in recent months as, seemingly every other day, a new report of hackers gaining access to private or sensitive information comes to light. Just recently, more than 500 million passwords were stolen when Yahoo revealed its security was compromised.

Securing systems has gone beyond simply coming up with a clever password that could prevent nefarious computer experts from hacking into your Facebook account. The more sophisticated the system, or the more critical, private information that system holds, the more advanced the identification system protecting it becomes.

Fingerprint scans and iris identification are just two types of authentication methods, once thought of as science fiction, that are in wide use by the most secure systems. But fingerprints can be stolen and iris scans can be replicated. Nothing has proven foolproof against computer hackers.

“The principal argument for behavioral, biometric authentication is that standard modes of authentication, like a password, authenticates you once before you access the service,” said Abdul Serwadda, a cybersecurity expert and assistant professor in the Department of Computer Science at Texas Tech University.

“Now, once you’ve accessed the service, there is no other way for the system to still know it is you. The system is blind as to who is using the service. So the area of behavioral authentication looks at other user-identifying patterns that can keep the system aware of the person who is using it. Through such patterns, the system can keep track of some confidence metric about who might be using it and immediately prompt for reentry of the password whenever the confidence metric falls below a certain threshold.”
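The confidence-and-threshold idea Serwadda describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name, the 0-to-1 match scores, the smoothing weight and the 0.5 threshold are all hypothetical, and a real system would derive its scores from EEG or other behavioral features.

```python
from dataclasses import dataclass


@dataclass
class ContinuousAuthSession:
    """Hypothetical session that tracks how confident the system is about its user."""

    threshold: float = 0.5   # assumed cutoff below which the password is requested again
    smoothing: float = 0.3   # assumed weight given to the newest behavioral score
    confidence: float = 1.0  # full confidence right after a successful password login

    def observe(self, match_score: float) -> bool:
        """Blend a new match score (0 = no match, 1 = perfect match) into the
        running confidence and report whether a password prompt is needed."""
        self.confidence = (
            (1 - self.smoothing) * self.confidence + self.smoothing * match_score
        )
        return self.confidence < self.threshold

    def reauthenticate(self) -> None:
        """Reset confidence after the user re-enters the password."""
        self.confidence = 1.0


session = ContinuousAuthSession()
# One good match followed by two complete mismatches, as if someone else took over.
prompts = [session.observe(score) for score in (0.9, 0.0, 0.0)]
```

With these assumed settings, a single poor score is not enough to lock the user out; the prompt is triggered only once the blended confidence drops below the threshold.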

One of those patterns that is growing in popularity within the research community is the use of brain waves obtained from an electroencephalogram, or EEG. Several research groups around the country have recently showcased systems which use EEG to authenticate users with very high accuracy.

However, those brain waves can tell more about a person than just his or her identity. They could reveal medical, behavioral or emotional aspects of a person that, if brought to light, could be embarrassing or damaging to that person. And with EEG devices becoming much more affordable, accurate and portable, and applications being designed that allow people to more readily read an EEG scan, the likelihood of that happening is dangerously high.

“The EEG has become a commodity application. For $100 you can buy an EEG device that fits on your head just like a pair of headphones,” Serwadda said. “Now there are apps on the market, brain-sensing apps where you can buy the gadget, download the app on your phone and begin to interact with the app using your brain signals. That led us to think; now we have these brain signals that were traditionally accessed only by doctors being handled by regular people. Now anyone who can write an app can get access to users’ brain signals and try to manipulate them to discover what is going on.”

That’s where Serwadda and graduate student Richard Matovu focused their attention: attempting to see if certain traits could be gleaned from a person’s brain waves. They presented their findings recently to the Institute of Electrical and Electronics Engineers (IEEE) International Conference on Biometrics.

Brain waves and cybersecurity

Serwadda said the technology is still evolving in terms of being able to use a person’s brain waves for authentication purposes. But it is a heavily researched field that has drawn the attention of several federal organizations. The National Science Foundation (NSF) funds a three-year project in which Serwadda and others from Syracuse University and the University of Alabama-Birmingham are exploring how several behavioral modalities, including EEG brain patterns, could be leveraged to augment traditional user authentication mechanisms.

“There are no installations yet, but a lot of research is going on to see if EEG patterns could be incorporated into standard behavioral authentication procedures,” Serwadda said.

Assuming a system uses EEG as the modality for user authentication, all of its variables have typically been optimized to maximize authentication accuracy. A selection of such variables would include:

- The features used to build user templates.
- The signal frequency ranges from which features are extracted.
- The regions of the brain on which the electrodes are placed, among other variables.

Under this assumption of a finely tuned authentication system, Serwadda and his colleagues tackled the following questions:

- If a malicious entity were to somehow access templates from this authentication-optimized system, would he or she be able to exploit these templates to infer non-authentication-centric information about the users with high accuracy?
- In the event that such inferences are possible, which attributes of template design could reduce or increase the threat?

As it turns out, they found that EEG authentication systems do give away non-authentication-centric information. Using an authentication system from UC-Berkeley and a variant of another from a team at Binghamton University and the University at Buffalo, Serwadda and Matovu tested their hypothesis, using alcoholism as the sensitive private information which an adversary might want to infer from EEG authentication templates.

In a study involving 25 formally diagnosed alcoholics and 25 non-alcoholic subjects, the lowest error rate obtained when identifying alcoholics was 25 percent, meaning a classification accuracy of approximately 75 percent.

When they tweaked the system and changed several variables, they found that the ability to detect alcoholic behavior could be tremendously reduced at the cost of slightly reducing the performance of the EEG authentication system.

Motivation for discovery

Serwadda’s motivation for proving brain waves could be used to reveal potentially harmful personal information wasn’t to improve the methods for obtaining that information. It was to prevent it.

To illustrate, he gives an analogy using fingerprint identification at an airport. Fingerprint scans read ridges and valleys on the finger to determine a person’s unique identity, and that’s it.

In a hypothetical scenario where such systems could only function accurately if the user’s finger was pricked and some blood drawn from it, this would be problematic because the blood drawn by the prick could be used to infer things other than the user’s identity, for example whether a person suffers from certain diseases such as diabetes.

Given the amount of extra information that EEG authentication systems are able to glean about the user, current EEG systems could be likened to the hypothetical fingerprint reader that pricks the user’s finger. Serwadda wants to drive research that develops EEG authentication systems that perform the intended purpose while revealing minimal information about traits other than the user’s identity in authentication terms.

Currently, in the vast majority of studies on the EEG authentication problem, researchers primarily seek to outdo each other in terms of the system error rates. They work with the central objective of designing a system having error rates which are much lower than the state-of-the-art. Whenever a research group develops or publishes an EEG authentication system that attains the lowest error rates, such a system is immediately installed as the reference point.

A critical question that has not seen much attention up to this point is how certain design attributes of these systems, in other words the kinds of features used to formulate the user template, might relate to their potential to leak sensitive personal information. If, for example, a system with the lowest authentication error rates comes with the added baggage of leaking a significantly higher amount of private information, then such a system might, in practice, not be as useful as its low error rates suggest. Users would only accept, and get the full utility of the system, if the potential privacy breaches associated with the system are well understood and appropriate mitigations undertaken.

But, Serwadda said, while the EEG is still being studied, the next wave of invention is already beginning.

“In light of the privacy challenges seen with the EEG, it is noteworthy that the next wave of technology after the EEG is already being developed,” Serwadda said. “One of those technologies is functional near-infrared spectroscopy (fNIRS), which has a much higher signal-to-noise ratio than an EEG. It gives a more accurate picture of brain activity given its ability to focus on a particular region of the brain.”

The good news, for now, is that fNIRS technology is still quite expensive; however, there is every likelihood that prices will drop over time, potentially leading to civilian applications of this technology. Thanks to the efforts of researchers like Serwadda, minimizing the leakage of sensitive personal information through these technologies is beginning to gain attention in the research community.

“The basic idea behind this research is to motivate a direction of research which selects design parameters in such a way that we not only care about recognizing users very accurately but also care about minimizing the amount of sensitive personal information it can read,” Serwadda said.

Read the original article here


- Impact Of Baby's Cries on Cognition

- By Jason von Stietz, M.A.

- July 20, 2016


Photo Credit: Getty Images

People often refer to “parental instincts” as an innate drive to care for one’s offspring. However, we know little of the role cognition plays in this instinct. Researchers at the University of Toronto examined the impact of the sound of a baby’s cries on performance during a cognitive task. The study was discussed in a recent article in Neuroscience Stuff:

“Parental instinct appears to be hardwired, yet no one talks about how this instinct might include cognition,” says David Haley, co-author and Associate Professor of psychology at U of T Scarborough.

“If we simply had an automatic response every time a baby started crying, how would we think about competing concerns in the environment or how best to respond to a baby’s distress?”

The study looked at the effect infant vocalizations—in this case audio clips of a baby laughing or crying—had on adults completing a cognitive conflict task. The researchers used the Stroop task, in which participants were asked to rapidly identify the color of a printed word while ignoring the meaning of the word itself. Brain activity was measured using electroencephalography (EEG) during each trial of the cognitive task, which took place immediately after a two-second audio clip of an infant vocalization.

The brain data revealed that the infant cries reduced attention to the task and triggered greater cognitive conflict processing than the infant laughs. Cognitive conflict processing is important because it controls attention—one of the most basic executive functions needed to complete a task or make a decision, notes Haley, who runs U of T’s Parent-Infant Research Lab.

“Parents are constantly making a variety of everyday decisions and have competing demands on their attention,” says Joanna Dudek, a graduate student in Haley’s Parent-Infant Research Lab and the lead author of the study.

“They may be in the middle of doing chores when the doorbell rings and their child starts to cry. How do they stay calm, cool and collected, and how do they know when to drop what they’re doing and pick up the child?”

A baby’s cry has been shown to cause aversion in adults, but it could also create an adaptive response by “switching on” the cognitive control parents use in effectively responding to their child’s emotional needs while also addressing other demands in everyday life, adds Haley.

“If an infant’s cry activates cognitive conflict in the brain, it could also be teaching parents how to focus their attention more selectively,” he says.

“It’s this cognitive flexibility that allows parents to rapidly switch between responding to their baby’s distress and other competing demands in their lives—which, paradoxically, may mean ignoring the infant momentarily.”

The findings add to a growing body of research suggesting that infants occupy a privileged status in our neurobiological programming, one deeply rooted in our evolutionary past. But, as Haley notes, it also reveals an important adaptive cognitive function in the human brain.

Read the original article here


- Studying Prejudice Using EEG

- By Jason von Stietz, M.A.

- March 5, 2016


Photo Credit: Getty Images

Generally, people hold more positive associations toward members of their “in-group” than toward members of an outgroup. For example, a sports fan would be quicker to bring to mind words such as “talented” or “great” when describing their favorite team than when describing other teams. This same process has been found to play out when relating to cultural or ethnic in-groups. People are quicker to bring to mind positive associations when presented with an image of someone from their own cultural background. The literature has suggested that this process is involved in unconscious prejudice, in which individuals are slower to relate positive attributes to people who are different from them. Researchers from the University of Bern investigated this process using EEG. The study was discussed in a recent article in Neuroscience News:

We do not always say what we think: we like to hide certain prejudices, sometimes even from ourselves. But unconscious prejudices become visible with tests, because we need a longer time if we must associate unpleasant things with positive terms. Researchers in Bern now show that additional processes in the brain are not responsible for this, but some of them simply take longer.

A soccer fan needs more time to associate a positive word with an opposing club than with his own team. And supporters of a political party associate a favourable attribute faster with their party than with political rivals – even if they endeavour towards the opposite. It has long been known that a positive association with one’s own group, an “in-group”, happens unconsciously faster than with an “outgroup”. These different reaction times become visible in the Implicit Association Test (IAT) with which psychologists examine unconscious processes and prejudices. But why the effort to address a friendly word to an outgroup takes more time was not clear up to now.

Now a team headed by Prof. Daria Knoch from the Department of Social Psychology and Social Neuroscience at the Institute of Psychology, University of Bern, shows that an additional mental process is not responsible for this, as has often been postulated – but rather the brain lingers longer in certain processes. The study has now been published in the scientific journal PNAS.

Number and sequence of processes are exactly the same

The researchers relied on a unique combination of methods for their study: they conducted an Implicit Association Test with 83 test subjects who are soccer fans or political supporters. While the test persons had to associate positive terms on the screen by means of a button click, either with their in-group or with an outgroup, the brain activity was recorded by means of an EEG (electroencephalogram). “We analysed these data with a so-called ‘microstate analysis’. It enabled us to depict all processes in the brain for the first time – from the presentation of a word up to pressing the button – temporally and also spatially”, explains co-lead author Dr. Lorena Gianotti from the Department of Social Psychology and Social Neuroscience.

The analysis shows the following: the brain runs through seven processes, from the presentation of stimulus – i.e. a word – up to button click, in less than one second. “The number and sequences of these processes remain exactly the same, regardless of whether the test subject had to associate positive words with the in-group, i.e. their club or their party, or with an outgroup”, explains co-lead author Dr. Bastian Schiller, who is in the meantime conducting research at the University of Freiburg.

The reaction time with the outgroup situation is therefore longer, because some of the seven processes take longer – and not because a new process is switched in between. “As a result, corresponding theories can be refuted”, says Schiller. A complete consideration of all processes in the brain is essential for an interpretation, emphasises Lorena Gianotti, and she illustrated this in the following example: on Monday after work you go out to eat with a friend and go to sleep afterwards at 10 pm. On Friday you do exactly the same thing – but you come home two hours later since you can sleep late on the next day. If you now compare the days at 8 pm, both times you were in a restaurant and one could conclude that this is an identical time schedule. If the comparison takes place at 11 pm, you are one time already in bed and one time still on the go. One could think that on Friday you were perhaps still in the sports studio or had an entirely different daily schedule. Therefore it is clear that selective considerations do not allow any conclusion with regard to the entire day – neither with regard to the sequence nor the activities.

“In the research of human behaviour it is essential to consider the underlying brain mechanisms. And this in turn requires suitable methods in order to gain comprehensive findings”, summarises study leader Daria Knoch. A combination of neuroscientific and psychological methods can lead to new insights.

Read the original article Here


- Brainwaves Spread Through Mild Electrical Field

- By Jason von Stietz, M.A.

- January 15, 2016


Photo Credit: iStockPhoto

Scientists once thought that the brain’s endogenous electrical fields were too weak to propagate wave transmission. However, recent findings from researchers at Case Western Reserve University suggest that brainwaves spread through a mild electrical field. The study was discussed in a recent article in Medical Xpress:

Researchers at Case Western Reserve University may have found a new way information is communicated throughout the brain.

Their discovery could lead to identifying possible new targets to investigate brain waves associated with memory and epilepsy and better understand healthy physiology.

They recorded neural spikes traveling at a speed too slow for known mechanisms to circulate throughout the brain. The only explanation, the scientists say, is the wave is spread by a mild electrical field they could detect. Computer modeling and in-vitro testing support their theory.

"Others have been working on such phenomena for decades, but no one has ever made these connections," said Steven J. Schiff, director of the Center for Neural Engineering at Penn State University, who was not involved in the study. "The implications are that such directed fields can be used to modulate both pathological activities, such as seizures, and to interact with cognitive rhythms that help regulate a variety of processes in the brain."

Scientists Dominique Durand, Elmer Lincoln Lindseth Professor in Biomedical Engineering at Case School of Engineering and leader of the research, former graduate student Chen Sui and current PhD students Rajat Shivacharan and Mingming Zhang, report their findings in The Journal of Neuroscience.

"Researchers have thought that the brain's endogenous electrical fields are too weak to propagate wave transmission," Durand said. "But it appears the brain may be using the fields to communicate without synaptic transmissions, gap junctions or diffusion."

How the fields may work

Computer modeling and testing on mouse hippocampi (the central part of the brain associated with memory and spatial navigation) in the lab indicate the field begins in one cell or group of cells.

Although the electrical field is of low amplitude, the field excites and activates immediate neighbors, which, in turn, excite and activate immediate neighbors, and so on across the brain at a rate of about 0.1 meter per second.

Blocking the endogenous electrical field in the mouse hippocampus and increasing the distance between cells in the computer model and in-vitro both slowed the speed of the wave.

These results, the researchers say, confirm that the propagation mechanism for the activity is consistent with the electrical field.

Because sleep waves and theta waves—which are associated with forming memories during sleep—and epileptic seizure waves travel at about 1 meter per second, the researchers are now investigating whether the electrical fields play a role in normal physiology and in epilepsy.

If so, they will try to discern what information the fields may be carrying. Durand's lab is also investigating where the endogenous spikes come from.

Read the original article Here


- fMRI and EEG Study of Decision-Making Processes in Brain

- By Jason von Stietz, M.A.

- September 19, 2015


Photo Credit: Getty Images

Researchers at the Institute for Neuroscience and Psychology at the University of Glasgow investigated the processes in the brain related to learning to avoid making mistakes and learning to make good decisions. The study used EEG and fMRI simultaneously, allowing researchers to study decision-making in the brain with both the high temporal precision offered by EEG and the ability, offered by fMRI, to detect precisely where these processes are taking place in the brain. The study was discussed in Neuroscience News:

Imagine picking wild berries in a forest when suddenly a swarm of bees flies out from behind a bush. In a split second, your motor system has already reacted to flee the swarm. This automatic response – acting before thinking – constitutes a powerful survival mechanism to avoid imminent danger.

In turn, a separate, more deliberate process of learning to avoid similar situations in the future will also occur, rendering future berry-picking attempts unappealing. This more deliberate, “thinking” process will assist in re-evaluating an outcome and adjusting how rewarding similar choices will be in the future.

“To date the biological validity and neural underpinnings of these separate value systems remain unclear,” said Dr Marios Philiastides, who led the work published in the journal Nature Communications.

In order to understand the neuronal basis of these systems, Dr. Philiastides’ team devised a novel state-of-the-art brain imaging procedure.

Specifically, they hooked up volunteers to an EEG machine (to measure brain electrical activity) while they were concurrently being scanned in an MRI machine.

An EEG machine records brain activity with high temporal precision (“when” things are happening in the brain) while functional MRI provides information on the location of this activity (“where” things are happening in the brain). To date, “when” and “where” questions have largely been studied separately, using each technique in isolation.

Dr. Philiastides’ lab is among the pioneering groups that have successfully combined the two techniques to simultaneously provide answers to both questions.

The ability to use EEG, which detects tiny electrical signals on the scalp, in an MRI machine, which generates large electromagnetic interference, hinges largely on the team’s ability to remove the ‘noise’ produced by the scanner.

During these measurements participants were shown a series of pairs of symbols and asked to choose the one they believed was more profitable (the one which earned them more points).

They performed this task through trial and error by using the outcome of each choice as a learning signal to guide later decisions. Picking the correct symbol rewarded them with points and increased the sum of money paid to them for taking part in the study while the other symbol did not.

To make the learning process more challenging and to keep participants engaged with the task, there was a probability that on 30% of occasions even the correct symbol would incur a penalty.
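The task described above can be sketched as a small Python simulation. It is only an illustration: the 30% penalty rate comes from the article, but the point values (+1, -1, 0), the symbol names and the running-average learner with occasional random exploration are assumptions made for the example, not the model used in the study.

```python
import random

PENALTY_RATE = 0.30  # from the article: the correct symbol is penalized on 30% of occasions


def outcome(symbol, correct, rng):
    """Points for one choice. The +1 / -1 / 0 point values are illustrative assumptions."""
    if symbol != correct:
        return 0  # the other symbol earns nothing
    return -1 if rng.random() < PENALTY_RATE else 1


def run_session(n_trials=5000, explore=0.2, seed=0):
    """Hypothetical learner that keeps a running average of points per symbol
    and uses it to guide later choices (trial and error)."""
    rng = random.Random(seed)
    symbols = ("A", "B")
    correct = "A"  # assumed: symbol A is the more profitable one
    value = {s: 0.0 for s in symbols}
    count = {s: 0 for s in symbols}
    for _ in range(n_trials):
        if rng.random() < explore:
            choice = rng.choice(symbols)           # occasionally try either symbol
        else:
            choice = max(symbols, key=value.get)   # otherwise pick the best so far
        points = outcome(choice, correct, rng)
        count[choice] += 1
        # Incremental update of the average points earned by this symbol.
        value[choice] += (points - value[choice]) / count[choice]
    return value, count


values, counts = run_session()
```

Even with the occasional penalty, the learned value of the correct symbol settles above that of the other symbol, which is why a trial-and-error learner can still find the more profitable choice.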

The results showed two separate (in time and space) but interacting value systems associated with reward-guided learning in the human brain.

The data suggests that an early system responds preferentially to negative outcomes only in order to initiate a fast automatic alertness response. Only after this initial response, a slower system takes over to either promote avoidance or approach learning, following negative and positive outcomes, respectively.

Critically, when negative outcomes occur, the early system down-regulates the late system so that the brain can learn to avoid repeating the same mistake and to readjust how rewarding similar choices would “feel” in the future.

The presence of these separate value systems suggests that different neurotransmitter pathways might modulate each system and facilitate their interaction, said Elsa Fouragnan, the first author of the paper.

Dr Philiastides added: “Our research opens up new avenues for the investigation of the neural system underlying normal as well as maladaptive decision making in humans.” Crucially, their findings have the potential to offer an improved understanding of how everyday responses to rewarding or stressful events can affect our capacity to make optimal decisions. In addition, the work can facilitate the study of how mental disorders associated with impairments in engaging with aversive outcomes (such as chronic stress, obsessive-compulsive disorder, post-traumatic disorder and depression) affect learning and strategic planning.

Read the original article Here


- Sleep and Impulse Control Issues Related to Spindling Excessive Beta

- By Jason von Stietz

- June 12, 2015


Photo Credit: Getty Images

Can EEG phenotypes predict issues with sleep and with impulse control? Brain Science International’s Jay Gunkelman, QEEG-D, recently co-authored an article on the relationship between the EEG-beta phenotype and behavior. The findings suggested that frontal spindling beta is related to sleep maintenance issues and impulse control problems. The article was published in the peer-reviewed journal Neuropsychiatric Electrophysiology:

Abstract

Background

In 2009 the United States National Institute of Mental Health (NIMH) introduced the Research Domain Criteria (RDoC) project, which intends to explicate fundamental bio-behavioral dimensions that cut across heterogeneous disorder categories in psychiatry. One major research domain is defined by arousal and regulatory systems.

Methods

In this study we aimed to investigate the relation between arousal systems (EEG-beta phenotypes also referred to as spindling excessive beta (SEB), beta spindles or sub-vigil beta) and the behavioral dimensions: insomnia, impulsivity/hyperactivity and attention. This analysis is conducted within a large and heterogeneous outpatient psychiatric population, in order to verify if EEG-beta phenotypes are an objective neurophysiological marker for psychopathological properties shared across psychiatric disorders.

Results

SEBs had an occurrence between 0–10.8% with a maximum occurrence at frontal and central locations, with similar topography for the heterogeneous sample as well as a more homogenous ADHD subgroup. Patients with frontal SEBs only, had significantly higher impulsivity/hyperactivity (specifically on impulse control items) and insomnia complaints with medium effect sizes.

Conclusions

Item level and mediation analysis revealed that sleep maintenance problems explained both frontal SEB EEG patterns (in line with SEB as a sub-vigil or hypoarousal EEG pattern) as well as the impulse control problems. These data thus suggest that frontal SEB might be regarded as a state marker caused by sleep maintenance problems, with concurrent impulse control problems. However, future longitudinal studies should investigate this state-trait issue further and replicate these findings. Also, studies manipulating SEB by, for example, neurofeedback and measuring consequent changes in sleep and impulse control could shed further light on this issue.

Read the article online Here


- Brain Studies Suggest Ways to Improve Learning

- By Jason von Stietz

- February 6, 2015


Photo Credit: Scientific American

Do brain training games work? Neuroscientists often study the underlying processes involved in learning in hopes of developing interventions to help children with learning difficulties, as well as to boost learning in other children. However, parents and educators are often unsure of what interventions to use, as evidence of their effectiveness is often mixed. Gary Stix discussed studies examining learning processes and interventions in a recent article in Scientific American:

Eight-month-old Lucas Kronmiller has just had the surface of his largely hairless head fitted with a cap of 128 electrodes. A research assistant in front of him is frantically blowing bubbles to entertain him. But Lucas seems calm and content. He has, after all, come here, to the Infancy Studies Laboratory at Rutgers University, repeatedly since he was just four months old, so today is nothing unusual. He—like more than 1,000 other youngsters over the past 15 years—is helping April A. Benasich and her colleagues to find out whether, even at the earliest age, it is possible to ascertain if a child will go on to experience difficulties in language that will prove a burdensome handicap when first entering elementary school.

Benasich is one of a cadre of researchers who have been employing brain-recording techniques to understand the essential processes that underlie learning. The new science of neuroeducation seeks the answers to questions that have always perplexed cognitive psychologists and pedagogues.

How, for instance, does a newborn's ability to process sounds and images relate to the child's capacity to learn letters and words a few years later? What does a youngster's ability for staying mentally focused in preschool mean for later academic success? What can educators do to foster children's social skills—also vital in the classroom? Such studies can complement the wealth of knowledge established by psychological and educational research programs.

They also promise to offer new ideas, grounded in brain science, for making better learners and for preparing babies and toddlers for reading, writing, arithmetic, and survival in the complex social network of nursery school and beyond. Much of this work focuses on the first years of life and the early grades of elementary school because some studies show that the brain is most able to change at that time.

The Aha! Instant

Benasich studies anomalies in the way the brains of the youngest children perceive sound, a cognitive process fundamental to language understanding, which, in turn, forms the basis for reading and writing skills. The former nurse, who later earned two doctorates, focuses on what she calls the aha! instant, an abrupt transition in electrical activity in the brain that signals that something new has been recognized [see “The Aha! Moment,” by Nessa Bryce].

Researchers at Benasich's lab in Newark, N.J., expose Lucas and other infants to tones of a certain frequency and duration. They then record a change in the electrical signals generated in the brain when a different frequency is played. Typically the electroencephalogram (EEG) produces a strong oscillation in response to the change, indicating that the brain essentially says, “Yes, something has changed”; a delay in the response time to the different tones means that the brain has not detected the new sound quickly enough.

The research has found that this pattern of sluggish electrical activity at six months can predict language issues at three to five years of age. Differences in activity that persist during the toddler and preschool years can foretell problems in development of the brain circuitry that processes the rapid transitions occurring during perception of the basic units of speech. If children fail to hear or process components of speech—say, a “da” or a “pa”—quickly enough as toddlers, they may lag in “sounding out” written letters or syllables in their head, which could later impede fluency in reading. These findings offer more rigorous confirmation of other research by Benasich showing that children who encounter early problems in processing these sounds test poorly on psychological tests of language eight or nine years later.

If Benasich and others can diagnose future language problems in infants, they may be able to correct them by exploiting the inherent plasticity of the developing brain—its capacity to change in response to new experiences. They may even be able to improve basic functioning for an infant whose brain is developing normally. “The easiest time to make sure that the brain is getting set up in a way that's optimal for learning may be in the first part of the first year,” she says.

Games, even in the crib, could be one answer. Benasich and her team have devised a game toy that trains a baby to react to a change in tone by turning the head or shifting the eyes (detected with a tracking sensor). When the movement occurs, a video snippet plays, a reward for good effort.

In a study reported in 2014 babies who went through this training detected tiny modulations within the sounds faster and more accurately than did children who only listened passively or had no exposure to the sounds at all. Based on this research, Benasich believes that the game would assist infants impaired in processing these sounds to respond more quickly. She is now working on an interactive game that could train infants to perceive rapid sound sequences.

The Number Sense

Flexing cognitive muscles early on may also help infants tune rudimentary math skills. Stanislas Dehaene, a neuroscientist at the French National Institute of Health and Medical Research, is a leader in the field of numerical cognition who has tried to develop ways to help children with early math difficulties. Babies have some capability of recognizing numbers from birth. When the skill is not in place from the beginning, Dehaene says, a child may later have difficulty with arithmetic and higher math. Interventions that build this “number sense,” as Dehaene calls it, may help the slow learner avoid years of difficulty in math class.

This line of research contradicts that of famed psychologist Jean Piaget, who contended that the brains of infants are blank slates, or tabula rasa, when it comes to making calculations in the crib. Children, in Piaget's view, have to develop a basic idea of what a number is from years of interacting with blocks, Cheerios or other objects. They eventually learn that when the little oat rings get pushed around a table, the location differs, but the number stays the same.

The neuroscience community has amassed a body of research showing that humans and other animals have a basic numerical sense. Babies, of course, do not spring from the womb performing differential equations in their head. But experiments have found that toddlers will routinely reach for the row of M&Ms that has the most candies. And other research has demonstrated that even infants only a few months old comprehend relative size. If they see five objects being hidden behind a screen and then another five added to the first set, they convey surprise if they see only five when the screen is removed.

Babies also seem to be born with other innate mathematical abilities. Besides being champion estimators, they can also distinguish exact numbers—but only up to the number three or four. Dehaene was instrumental in pinpointing a brain region—a part of the parietal lobe (the intraparietal sulcus)—where numbers and approximate quantities are represented. (Put a hand on the rear portion of the top of your head to locate the parietal lobe.)

The ability to estimate group size, which also exists in dolphins, rats, pigeons, lions and monkeys, is probably an evolutionary hand-me-down that is required to gauge whether your clan should fight or flee in the face of an enemy and to ascertain which tree bears the most fruit for picking. Dehaene, along with linguist Pierre Pica of the National Center for Scientific Research in France and colleagues, discovered more evidence for this instinctive ability through work with the Mundurukú Indians in the Brazilian Amazon, a tribe that has only an elementary lexicon for numbers. Its adult members can tell whether one array of dots is bigger than another, performing the task almost as well as a French control group did, yet most are unable to answer how many objects remain when four objects are removed from a group of six.

This approximation system is a cornerstone on which more sophisticated mathematics is constructed. Any deficit in these innate capacities can spell trouble later. In the early 1990s Dehaene hypothesized that children build on their internal ballpark estimation system for more sophisticated computations as they get older. Indeed, in the years since then, a number of studies have found that impaired functioning of the primitive numerical estimation system in youngsters can predict that a child will perform poorly in arithmetic and standard math achievement tests from the elementary years onward. “We realize now that the learning of a domain such as arithmetic has to be founded on certain core knowledge that is available already in infancy,” Dehaene says.

It turns out that dyscalculia (the computational equivalent of dyslexia), which is marked by a lag in computational skills, affects 3 to 7 percent of children. Dyscalculia has received much less attention from educators than dyslexia has for reading—yet it may be just as crippling. “They earn less, spend less, are more likely to be sick, are more likely to be in trouble with the law, and need more help in school,” notes a review article that appeared in Science in May 2011.

As with language, early intervention may help. Dehaene and his team devised a simple computer game they hope will enhance mathematical ability. Called the Number Race, it exercises these basic abilities in children aged four to eight. In one version, players must choose the larger of two quantities of gold pieces before a computer-controlled opponent steals the biggest pile. The game adapts automatically to the skill of the player, and at the higher levels the child must add or subtract gold before making a comparison to determine the biggest pile. If the child wins, she advances forward a number of steps equal to the gold just won. The first player to get to the last step on the virtual playing board wins.

The open-source software, which has been translated into eight languages, makes no hyperbolic claims about the benefits of brain training. Even so, more than 20,000 teachers have downloaded the software from a government-supported research institute in Finland. Today it is being tested in several controlled studies to see whether it prevents dyscalculia and whether it helps healthy children bolster their basic number sense.

Get Ahold of Yourself

The cognitive foundations of good learning depend heavily on what psychologists call executive function, a term encompassing such cognitive attributes as the ability to be attentive, hold what you have just seen or heard in the mental scratch pad of working memory, and delay gratification. These capabilities may predict success in school and even in the working world. In 1972 a famous experiment at Stanford University—“Here's a marshmallow, and I'll give you another if you don't eat this one until I return”—showed the importance of executive function. Children who could wait, no matter how much they wanted the treat, did better in school and later in life.

In the 21st century experts have warmed to the idea of executive function as a teachable skill. An educational curriculum called Tools of the Mind has had success in some low-income school districts, where children typically do not fare as well academically as those in high-income districts. The program trains children to resist temptations and distractions and to practice tasks designed to enhance working memory and flexible thinking.

In one example of a self-regulation task, a child might tell himself aloud what to do. These techniques are potentially so powerful that in centers of higher learning, economists now contemplate public policy measures to improve self-control as a way to “enhance the physical and financial health of the population and reduce the rate of crime,” remark the authors of a study that appeared in 2011 in the Proceedings of the National Academy of Sciences USA.

Findings from neuroscience labs have bolstered that view and have revealed that the tedium of practice to resist metaphorical marshmallows may not be necessary. Music training can work as well. Echoing the Battle Hymn of the Tiger Mother, researchers are finding that assiduous practice of musical instruments may yield a payoff in the classroom—invoking shades of “tiger mom” author Amy Chua, who insisted that her daughters spend endless hours on the violin and piano. Playing an instrument may improve attention, working memory and self-control.

Some of the research providing such findings comes from a group of neuroscientists led by Nina Kraus of Northwestern University. Kraus, head of the Auditory Neuroscience Laboratory there, grew up with a diverse soundscape at home. Her mother, a classical musician, spoke to the future neuroscientist in her native Italian, and Kraus still plays the piano, guitar and drums. “I love it—it's a big part of my life,” she says, although she considers herself “just a hack musician.”

Kraus has used EEG recordings to measure how the nervous system encodes pitch, timing and timbre of musical compositions—and whether neural changes that result from practicing music improve cognitive faculties. Her lab has found that music training enhances working memory and, perhaps most important, makes students better listeners, allowing them to extract speech from the all-talking-at-once atmosphere that sometimes prevails in the classroom.

Musical training as brain tonic is still in its infancy, and a number of questions remain unanswered about exactly what type of practice enhances executive function: Does it matter whether you play the piano or guitar or whether the music was written by Mozart or the Beatles? Critically, will music classes help students who have learning difficulties or who come from low-income school districts?

But Kraus points to anecdotal evidence suggesting that music training's impact extends even to academic classes. The Harmony Project provides music education to low-income youngsters in Los Angeles. Dozens of students participating in the project have graduated from high school and gone on to college, usually the first in their family to do so.

Kraus has worked with the Harmony Project and published a study in 2014 that showed that children in one of its programs who practiced a musical instrument for two years could process sounds closely linked to reading and language skills better than children who only did so for a year. Kraus is an advocate of the guitar over brain games. “If students have to choose how to spend their time between a computer game that supposedly boosts memory or a musical instrument, there's no question, in my mind, which one is more beneficial for the nervous system,” Kraus says. “If you're trying to copy a guitar lead, you have to keep it in your head and try to reproduce it over and over.”

Hype Alert

As research continues on the brain mechanisms underlying success in the “four Rs,” three traditional ones with regulation of one's impulses as the fourth, many scientists involved with neuroeducation are taking pains to avoid overhyping the interventions they are testing. They are eager to translate their findings into practical assistance for children, but they are also well aware that the research still has a long way to go. They know, too, that teachers and parents are already bombarded by a confusing raft of untested products for enhancing learning and that some highly touted tools have proved disappointing.

In one case in point, a small industry developed several years ago around the idea that just listening to a Mozart sonata could make a baby smarter, a contention that failed to withstand additional scrutiny. Kraus's research suggests that to gain any benefit, you have to actually play an instrument, exercising auditory-processing areas of the brain: the more you practice, the more your abilities to distinguish subtleties in sound develop. Listening alone is not sufficient.

Similarly, even some of the brain-training techniques that claim to have solid scientific proof of their effectiveness have been questioned. A meta-analysis that appeared in the March 2011 issue of the Journal of Child Psychology and Psychiatry reviewed studies of perhaps the best known of all brain-training methods—software called Fast ForWord, developed by Paula A. Tallal of Rutgers, Michael Merzenich of the University of California, San Francisco, and their colleagues. The analysis found no evidence of effectiveness in helping children with language or reading difficulties. As with the methods used by Benasich, a former postdoctoral fellow with Tallal, the software attempts to improve deficits in the processing of sound that can lead to learning problems. The meta-analysis provoked a sharp rebuttal from Scientific Learning, the maker of the software, which claimed that the selection criteria were too restrictive, that most studies in the analysis were poorly implemented and that the software has been improved since the studies were conducted.

The clichéd refrain—more research is needed—applies broadly to many endeavors in neuroeducation. Dehaene's number game still needs fine adjustments before it receives wide acceptance. One controlled study showed that the game helped children compare numbers, although that achievement did not carry over into better counting or arithmetic skills. A new version is being released that the researchers hope will address these problems. Yet another finding has questioned whether music training improves executive function and thereby enhances intelligence.

In a nascent field, one study often contradicts another, only to be followed by a third that disputes the first two. This zigzag trajectory underlies all of science and at times leads to claims that overreach. In neuroeducation, teachers and parents have sometimes become the victims of advertising for “science-based” software and educational programs. “It's confusing. It's bewildering,” says Deborah Rebhuhn, a math teacher at the Center School, a special-education institution in Highland Park, N.J., that accepts students from public schools statewide. “I don't know which thing to try. And there's not enough evidence to go to the head of the school and say that something works.”

A Preschool Tune-up

Scientists who spend their days mulling over EEG wave forms and complex digital patterns in magnetic resonance imaging realize that they cannot yet offer definitive neuroscience-based prescriptions for improving learning. The work, however, is leading to a vision of what is possible, perhaps for Generation Z or its progeny. Consider the viewpoint of John D. E. Gabrieli, a professor of neuroscience participating in a collaborative program between Harvard University and the Massachusetts Institute of Technology. In a review article in Science in 2009, Gabrieli conjectured that eventually brain-based evaluation methods, combined with traditional testing, family history and perhaps genetic tests, could detect reading problems by age six and allow for intensive early intervention that might eliminate many dyslexia cases among school-aged children.

One study has already found that EEGs in kindergartners predict reading ability in fifth graders better than standard psychological measures. By undergoing brain monitoring combined with standard methods, each child might be evaluated before entering school and, if warranted, be given remedial training based on the findings that are trickling in today from neuroscience labs. If Gabrieli's vision comes to pass, brain science may imbue the notion of individualized education with a whole new meaning—one that involves enhancing the ability to learn even before a child sets foot in the classroom.

Read the original article Here

- Comments (0)

- Brain Connectivity Related to Epilepsy

- By Jason von Stietz

- December 19, 2014

Photo Credit: Getty Images

Recent findings from researchers at the University of Exeter could refine diagnostic procedures for a common form of epilepsy. Researchers investigated the use of mathematical modeling to assess an individual’s EEG for susceptibility to idiopathic generalized epilepsy (IGE) and found brain connectivity to be an important factor. The study was discussed in a recent article in NeuroScientistNews:

Current diagnosis practices typically observe electrical activities associated with seizures in a clinical environment.

The ground-breaking research has revealed differences in the way that distant regions of the brain connect with each other and how these differences may lead to the generation of seizures in people with IGE.

By using computer algorithms and mathematical models, the research team, led by Professor John Terry, was able to develop systems to analyse EEG recordings gathered while the patient is at rest, and reveal subtle differences in dynamic network properties that enhance susceptibility to seizures.

Professor Terry said: "Our research offers the fascinating possibility of a revolution in diagnosis for people with epilepsy.

"It would move us from diagnosis based on a qualitative assessment of easily observable features, to one based on quantitative features extracted from routine clinical recordings.

"Not only would this remove risk to people with epilepsy, but also greatly speed up the process, since only a few minutes of resting state data would need to be collected in each case."

The pivotal research has been published in a recent series of papers, the most recent of which has been published online in the scientific journal Frontiers in Neurology.

In their first study, EEG recordings were used to build a picture of how different regions of the brain were connected, based upon the level of synchrony between them. The collaborative team of researchers from Exeter and King's College London then used a suite of mathematical tools to characterise these large-scale resting brain networks, observing that in people with IGE these networks were relatively over-connected, in contrast to healthy controls.

They further observed a similar finding in first-degree relatives of this cohort of people with IGE; an intriguing finding which suggests that brain network alterations are an endophenotype of the condition - an inherited and necessary, but not sufficient, condition for epilepsy to occur.

In a second study the team sought to understand how these alterations in large-scale brain networks could heighten susceptibility to recurrent seizures and thus the condition epilepsy. The study revealed two intriguing findings - firstly, the level of communication between regions that would lead to their activity becoming synchronised was lower in the brain networks of people with IGE, suggesting a possible mechanism of seizure initiation; and further that altering the activity in specific left frontal brain regions of the computational model could more easily lead to synchronous activity throughout the whole network.

In their most recent study, researchers have introduced the concept of "Brain Network Ictogenicity" (or BNI) as a probabilistic measure for a given network to generate seizures. They found that the brain networks of people with IGE had a high BNI, whereas the brain networks of healthy controls had a low BNI.

Professor Terry said: "Our most recent analyses have revealed that the average connectedness of each brain region is key to creating an environment where seizures can thrive.

"This feature appropriately discriminates between cohorts of people with idiopathic generalized epilepsies and healthy control subjects. The next critical stage of our research is to validate in a prospective study the predictive power of these findings."
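The network idea described in the article can be illustrated with a toy sketch. It is not the Exeter team's method: it assumes plain Pearson correlation as the synchrony measure, a fixed, arbitrary threshold of 0.5 for drawing a connection, and synthetic sine waves in place of EEG channels. It only shows how "average connectedness" (mean degree) can be read off a synchrony-based network, and it does not compute BNI. All function names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length signals."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def synchrony_network(channels, threshold=0.5):
    """Link two channels when |correlation| >= threshold (assumed rule)."""
    n = len(channels)
    adjacency = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if abs(pearson(channels[i], channels[j])) >= threshold:
                adjacency[i][j] = adjacency[j][i] = 1
    return adjacency


def mean_degree(adjacency):
    """Average number of connections per region."""
    return sum(sum(row) for row in adjacency) / len(adjacency)


# Synthetic "channels": two nearly in-phase signals and one unrelated signal.
N = 200
times = range(N)
channels = [
    [math.sin(2 * math.pi * 5 * t / N) for t in times],
    [math.sin(2 * math.pi * 5 * t / N + 0.2) for t in times],
    [math.sin(2 * math.pi * 9 * t / N) for t in times],
]

network = synchrony_network(channels)
print(network)
print(mean_degree(network))
```

In this toy data only the first two channels are linked, so a more "over-connected" network would simply show a higher mean degree under the same threshold.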

Read the original article Here

- Comments (0)

- EEG Patterns Can Inform Effective Use of ADHD Medications

- By Jason von Stietz

- August 15, 2014

Jay Gunkelman, QEEG Diplomate and Chief Science Officer of Brain Science International, was recently interviewed regarding EEG patterns and the effective use of ADHD medications. Gunkelman discussed how a patient's raw EEG and QEEG could give physicians valuable insight into the possible effectiveness of different ADHD medications. Gunkelman's discussion of medication usage was covered in an article in HCP Live:

While medication is usually the first-line choice, Gunkelman said other treatment options like neurofeedback and neuromodulation are often overlooked. Presently, some of the most popular options include stimulants, amphetamine-related norepinephrine (NE) agonists, or “nonstimulant” NE-reuptake inhibitors, he continued.

“The medication intervention is hypothesized to operate through an increased engagement of the mirror neuron system, as reflected in the related EEG rhythm: Mu,” Gunkelman noted. “Mu is a normal EEG variant in the alpha frequency band, which can be seen in the EEG bicentrally in the absence of movement, intention to move, or even ‘engagement.’ ”

With so many available options, Gunkelman said it can be difficult for doctors to find the right treatment for a particular ADHD patient. Nevertheless, “the real trick is picking the right one the first time, or at least avoiding the obvious contraindications,” he noted.

During that long and tenuous process, Gunkelman said physicians might try to mix a variety of medications; however, that method increases the risk of side effects, which he said is “especially true if drugs are mismatched with the client’s underlying neurophysiological profile.”

In describing the risks of the “try one” method, Gunkelman cited statistics from the STAR*D study that showed only a 38.6% initial trial efficacy for depression in a field of more than 3,000 patients. After a fourth set of trials, 33% of participants still complained of clinical depression, he said.

“Don’t dive into the water unless you know what is under the surface,” Gunkelman warned. “If clinical practitioners wish to ‘look’ before they just try one of this long list of medications, then they should look at the brain’s function prior to prescribing a medication to treat a client.”

Examining EEG results can be a key factor in anticipating how patients will respond to prescribed medications, Gunkelman noted. Potential observations can include excessive frontal theta, frontal slower frequency alpha, and frontal age-appropriate frequency alpha, in addition to beta spindles and paroxysmal or epileptiform discharges.

“All of these patterns can disturb the frontal lobe’s function, resulting in the same behavioral manifestation of the multiple physiological patterns, each representing a very different pathophysiology and predicting very different pharmacotherapeutic approaches,” Gunkelman explained.

Gunkelman also demonstrated a “lock and key” system for matching proper medications with EEG readings. Among various situations, he suggested prescribing methylphenidate for patients who have a frontal theta pattern, as well as those with slower-frequency alpha readings who need more NE released in their prescriptions. However, when that method is not used or is unsuccessful, the author warned of rapid withdrawal symptoms in patients that could cause significant side effects such as dizziness, nausea, insomnia, anxiety, and even paresthesias. Depending on the type of medication, other possible side effects include stomach issues, mood instability, and sleep disturbances.

Read the Full Article Here

- Comments (1)

- Brainwaves Can Predict Audience Reaction

- By Jason von Stietz

- July 31, 2014

Photo Credit: Getty Images

Could EEG more accurately predict the general population's response to movies or television programming than self-reports? Researchers at The City College of New York found EEG to be a strong predictor. Findings indicated that high agreement in study participants' EEG signified a positive response to what was being viewed. In contrast, low agreement in EEG indicated less enjoyment by the participants. Medical Xpress discussed the study in a recent article:

By analyzing the brainwaves of 16 individuals as they watched mainstream television content, researchers were able to accurately predict the preferences of large TV audiences, up to 90% in the case of Super Bowl commercials. The findings appear in a paper entitled "Audience Preferences Are Predicted by Temporal Reliability of Neural Processing," published July 29, 2014, in Nature Communications.

"Alternative methods, such as self-reports, are fraught with problems as people conform their responses to their own values and expectations," said Dr. Jacek Dmochowski, lead author of the paper and a postdoctoral fellow at City College during the research. However, brain signals measured using electroencephalography (EEG) can, in principle, alleviate this shortcoming by providing immediate physiological responses immune to such self-biasing. "Our findings show that these immediate responses are in fact closely tied to the subsequent behavior of the general population," he added.

Dr. Lucas Parra, Herbert Kayser Professor of Biomedical Engineering in CCNY's Grove School of Engineering and the paper's senior author, explained: "When two people watch a video, their brains respond similarly – but only if the video is engaging. Popular shows and commercials draw our attention and make our brainwaves very reliable; the audience is literally 'in-sync'."

In the study, participants watched scenes from "The Walking Dead" TV show and several commercials from the 2012 and 2013 Super Bowls. EEG electrodes were placed on their heads to capture brain activity. The reliability of the recorded neural activity was then compared to audience reactions in the general population using publicly available social media data provided by the Harmony Institute and ratings from USA Today's Super Bowl Ad Meter.

"Brain activity among our participants watching 'The Walking Dead' predicted 40% of the associated Twitter traffic," said Professor Parra. "When brainwaves were in agreement, the number of tweets tended to increase." Brainwaves also predicted 60% of the Nielsen Ratings that measure the size of a TV audience.

The study was even more accurate (90 percent) when comparing preferences for Super Bowl ads. For instance, researchers saw very similar brainwaves from their participants as they watched a 2012 Budweiser commercial that featured a beer-fetching dog. The general public voted the ad as their second favorite that year. The study found little agreement in the brain activity among participants when watching a GoDaddy commercial featuring a kissing couple. It was among the worst rated ads in 2012.
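The notion of viewers' brainwaves being "in agreement" can be made concrete with a small sketch. This is a simplified stand-in and not the analysis used in the paper: it assumes one signal per viewer and scores agreement as the mean pairwise Pearson correlation across viewers. The synthetic sine-wave "recordings" and all function names are hypothetical.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation between two equal-length signals."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def inter_subject_agreement(recordings):
    """Mean pairwise correlation across viewers (assumed agreement score)."""
    pairs = list(combinations(recordings, 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


N = 100
times = range(N)


def wave(freq, t):
    """Helper producing one sample of a sine wave with `freq` cycles per clip."""
    return math.sin(2 * math.pi * freq * t / N)


# "Engaging" clip: every viewer shares a common response plus a small
# viewer-specific component.
engaging = [
    [wave(3, t) + 0.2 * wave(7 + k, t) for t in times] for k in range(3)
]

# "Dull" clip: each viewer's signal is unrelated to the others.
dull = [[wave(7 + k, t) for t in times] for k in range(3)]

print(inter_subject_agreement(engaging))  # close to 1
print(inter_subject_agreement(dull))      # close to 0
```

A higher score here corresponds to the high-agreement case the researchers associate with popular content; real EEG would need many channels, filtering and a more careful reliability measure.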

Read the Full Article Here

- Comments (0)

Subscribe to our Feed via RSS