Blog

- Brain Computer Interface Generates Touch Sensation For Paralyzed Man

- By Jason von Stietz, M.A.

- October 21, 2016

Credit: UPMC/Pitt Health Sciences Media Relations

The sense of touch is critical to everyday functioning. However, until recently, prosthetic limbs have been unable to recreate it for their users. Researchers at the University of Pittsburgh recently examined the use of microelectrodes implanted in the somatosensory cortex of a person with a spinal cord injury; stimulation through these electrodes generated sensations of touch that he perceived as coming from his own paralyzed limb. The study was discussed in a recent article in Medical Xpress:

Imagine being in an accident that leaves you unable to feel any sensation in your arms and fingers. Now imagine regaining that sensation, a decade later, through a mind-controlled robotic arm that is directly connected to your brain.

That is what 28-year-old Nathan Copeland experienced after he came out of brain surgery and was connected to the Brain Computer Interface (BCI), developed by researchers at the University of Pittsburgh and UPMC. In a study published online today in Science Translational Medicine, a team of experts led by Robert Gaunt, Ph.D., assistant professor of physical medicine and rehabilitation at Pitt, demonstrated for the first time ever in humans a technology that allows Mr. Copeland to experience the sensation of touch through a robotic arm that he controls with his brain.

"The most important result in this study is that microstimulation of sensory cortex can elicit natural sensation instead of tingling," said study co-author Andrew B. Schwartz, Ph.D., distinguished professor of neurobiology and chair in systems neuroscience, Pitt School of Medicine, and a member of the University of Pittsburgh Brain Institute. "This stimulation is safe, and the evoked sensations are stable over months. There is still a lot of research that needs to be carried out to better understand the stimulation patterns needed to help patients make better movements."

This is not the Pitt-UPMC team's first attempt at a BCI. Four years ago, study co-author Jennifer Collinger, Ph.D., assistant professor, Pitt's Department of Physical Medicine and Rehabilitation, and research scientist for the VA Pittsburgh Healthcare System, and the team demonstrated a BCI that helped Jan Scheuermann, who has quadriplegia caused by a degenerative disease. The video of Scheuermann feeding herself chocolate using the mind-controlled robotic arm was seen around the world. Before that, Tim Hemmes, paralyzed in a motorcycle accident, reached out to touch hands with his girlfriend.

But the way our arms naturally move and interact with the environment around us is due to more than just thinking and moving the right muscles. We are able to differentiate between a piece of cake and a soda can through touch, picking up the cake more gently than the can. The constant feedback we receive from the sense of touch is of paramount importance as it tells the brain where to move and by how much.

For Dr. Gaunt and the rest of the research team, that was the next step for the BCI. As they were looking for the right candidate, they developed and refined their system such that inputs from the robotic arm are transmitted through a microelectrode array implanted in the brain where the neurons that control hand movement and touch are located. The microelectrode array and its control system, which were developed by Blackrock Microsystems, along with the robotic arm, which was built by Johns Hopkins University's Applied Physics Lab, formed all the pieces of the puzzle.

In the winter of 2004, Mr. Copeland, who lives in western Pennsylvania, was driving at night in rainy weather when he was in a car accident that snapped his neck and injured his spinal cord, leaving him with quadriplegia from the upper chest down, unable to feel or move his lower arms and legs, and needing assistance with all his daily activities. He was 18 and in his freshman year of college pursuing a degree in nanofabrication, following a high school spent in advanced science courses.

He tried to continue his studies, but health problems forced him to put his degree on hold. He kept busy by going to concerts and volunteering for the Pittsburgh Japanese Culture Society, a nonprofit that holds conventions around the Japanese cartoon art of anime, something Mr. Copeland became interested in after his accident.

Right after the accident he had enrolled himself on Pitt's registry of patients willing to participate in clinical trials. Nearly a decade later, the Pitt research team asked if he was interested in participating in the experimental study.

After he passed the screening tests, Nathan was wheeled into the operating room last spring. Study co-investigator and UPMC neurosurgeon Elizabeth Tyler-Kabara, M.D., Ph.D., assistant professor, Department of Neurological Surgery, Pitt School of Medicine, implanted four tiny microelectrode arrays, each about half the size of a shirt button, in Nathan's brain. Prior to the surgery, imaging techniques were used to identify the exact regions in Mr. Copeland's brain corresponding to feelings in each of his fingers and his palm.

"I can feel just about every finger; it's a really weird sensation," Mr. Copeland said about a month after surgery. "Sometimes it feels electrical and sometimes it's pressure, but for the most part, I can tell most of the fingers with definite precision. It feels like my fingers are getting touched or pushed."

At this time, Mr. Copeland can feel pressure and distinguish its intensity to some extent, though he cannot identify whether a substance is hot or cold, explains Dr. Tyler-Kabara.

Michael Boninger, M.D., professor of physical medicine and rehabilitation at Pitt, and senior medical director of post-acute care for the Health Services Division of UPMC, recounted how the Pitt team has achieved milestone after milestone, from a basic understanding of how the brain processes sensory and motor signals to applying it in patients.

"Slowly but surely, we have been moving this research forward. Four years ago we demonstrated control of movement. Now Dr. Gaunt and his team took what we learned in our tests with Tim and Jan—for whom we have deep gratitude—and showed us how to make the robotic arm allow its user to feel through Nathan's dedicated work," said Dr. Boninger, also a co-author on the research paper.

Dr. Gaunt explained that everything about the work is meant to make use of the brain's natural, existing abilities to give people back what was lost but not forgotten.

"The ultimate goal is to create a system which moves and feels just like a natural arm would," says Dr. Gaunt. "We have a long way to go to get there, but this is a great start."

Read the original article Here

- Walking In Nature Leads To Measurable Brain Changes

- By Jason von Stietz, M.A.

- September 25, 2016

Getty Images

People often find a walk in nature to be a relaxing way of letting go of stressful thoughts. But do these walks have a measurable effect on the brain? Researchers at Stanford University examined the relationship between walking in nature and activity in the subgenual prefrontal cortex, a part of the brain associated with rumination. The study was discussed in an article in The New York Times:

Most of us today live in cities and spend far less time outside in green, natural spaces than people did several generations ago.

City dwellers also have a higher risk for anxiety, depression and other mental illnesses than people living outside urban centers, studies show.

These developments seem to be linked to some extent, according to a growing body of research. Various studies have found that urban dwellers with little access to green spaces have a higher incidence of psychological problems than people living near parks and that city dwellers who visit natural environments have lower levels of stress hormones immediately afterward than people who have not recently been outside.

But just how a visit to a park or other green space might alter mood has been unclear. Does experiencing nature actually change our brains in some way that affects our emotional health?

That possibility intrigued Gregory Bratman, a graduate student at the Emmett Interdisciplinary Program in Environment and Resources at Stanford University, who has been studying the psychological effects of urban living. In an earlier study published last month, he and his colleagues found that volunteers who walked briefly through a lush, green portion of the Stanford campus were more attentive and happier afterward than volunteers who strolled for the same amount of time near heavy traffic.

But that study did not examine the neurological mechanisms that might underlie the effects of being outside in nature.

So for the new study, which was published last week in Proceedings of the National Academy of Sciences, Mr. Bratman and his collaborators decided to closely scrutinize what effect a walk might have on a person’s tendency to brood.

Brooding, which is known among cognitive scientists as morbid rumination, is a mental state familiar to most of us, in which we can’t seem to stop chewing over the ways in which things are wrong with ourselves and our lives. This broken-record fretting is not healthy or helpful. It can be a precursor to depression and is disproportionately common among city dwellers compared with people living outside urban areas, studies show.

Perhaps most interesting for the purposes of Mr. Bratman and his colleagues, however, such rumination also is strongly associated with increased activity in a portion of the brain known as the subgenual prefrontal cortex.

If the researchers could track activity in that part of the brain before and after people visited nature, Mr. Bratman realized, they would have a better idea about whether and to what extent nature changes people’s minds.

Mr. Bratman and his colleagues first gathered 38 healthy, adult city dwellers and asked them to complete a questionnaire to determine their normal level of morbid rumination.

The researchers also checked for brain activity in each volunteer’s subgenual prefrontal cortex, using scans that track blood flow through the brain. Greater blood flow to parts of the brain usually signals more activity in those areas.

Then the scientists randomly assigned half of the volunteers to walk for 90 minutes through a leafy, quiet, parklike portion of the Stanford campus and the other half to walk for the same length of time next to a loud, hectic, multi-lane highway in Palo Alto. The volunteers were not allowed to have companions or listen to music, but they could walk at their own pace.

Immediately after completing their walks, the volunteers returned to the lab and repeated both the questionnaire and the brain scan.

As might have been expected, walking along the highway had not soothed people’s minds. Blood flow to their subgenual prefrontal cortex was still high and their broodiness scores were unchanged.

But the volunteers who had strolled along the quiet, tree-lined paths showed slight but meaningful improvements in their mental health, according to their scores on the questionnaire. They were not dwelling on the negative aspects of their lives as much as they had been before the walk.

They also had less blood flow to the subgenual prefrontal cortex. That portion of their brains was quieter.

These results “strongly suggest that getting out into natural environments” could be an easy and almost immediate way to improve moods for city dwellers, Mr. Bratman said.

But of course many questions remain, he said, including how much time in nature is sufficient or ideal for our mental health, as well as what aspects of the natural world are most soothing. Is it the greenery, quiet, sunniness, loamy smells, all of those, or something else that lifts our moods? Do we need to be walking or otherwise physically active outside to gain the fullest psychological benefits? Should we be alone or could companionship amplify mood enhancements?

“There’s a tremendous amount of study that still needs to be done,” Mr. Bratman said.

But in the meantime, he pointed out, there is little downside to strolling through the nearest park, and some chance that you might beneficially muffle, at least for a while, your subgenual prefrontal cortex.

Read the original article Here

- Neurofeedback Training of Amygdala Increases Emotional Regulation

- By Jason von Stietz, M.A.

- September 18, 2016

Getty Images

The amygdala plays a key role in emotion regulation. Researchers from Tel Aviv University examined the impact of neurofeedback aimed at reducing amygdala activity, as estimated from EEG, on emotion regulation in healthy participants. The study was discussed in a recent article in NeuroScientistNews:

Training the brain to treat itself is a promising therapy for traumatic stress. The training, called neurofeedback, uses an auditory or visual signal that corresponds to the activity of a particular brain region and can guide people to regulate their own brain activity.

However, treating stress-related disorders requires accessing the brain's emotional hub, the amygdala, which is located deep in the brain and difficult to reach with typical neurofeedback methods. This type of activity has typically only been measured using functional magnetic resonance imaging (fMRI), which is costly and poorly accessible, limiting its clinical use.

A study published in Biological Psychiatry tested a new imaging method that provided reliable neurofeedback on the level of amygdala activity using electroencephalography (EEG), and allowed people to alter their own emotional responses through self-regulation of its activity.

"The major advancement of this new tool is the ability to use a low-cost and accessible imaging method such as EEG to depict deeply located brain activity," said senior author Dr. Talma Hendler of Tel Aviv University in Israel and the Sagol Brain Center at Tel Aviv Sourasky Medical Center, and first author Jackob Keynan, a PhD student in Hendler's laboratory, in an email to Biological Psychiatry.

The researchers built upon a new imaging tool they had developed in a previous study that uses EEG to measure changes in amygdala activity, indicated by its "electrical fingerprint". With the new tool, 42 participants were trained to reduce an auditory feedback corresponding to their amygdala activity using any mental strategies they found effective.

During this neurofeedback task, the participants learned to modulate their own amygdala electrical activity. This also led to improved downregulation of blood-oxygen-level-dependent (BOLD) signals of the amygdala, an indicator of regional activation measured with fMRI.

In another experiment with 40 participants, the researchers showed that learning to downregulate amygdala activity could actually improve behavioral emotion regulation. They showed this using a behavioral task invoking emotional processing in the amygdala. The findings show that with this new imaging tool, people can modify both the neural processes and behavioral manifestations of their emotions.

"We have long known that there might be ways to tune down the amygdala through biofeedback, meditation, or even the effects of placebos," said John Krystal, Editor of Biological Psychiatry. "It is an exciting idea that perhaps direct feedback on the level of activity of the amygdala can be used to help people gain control of their emotional responses."

The participants in the study were healthy, so the tool still needs to be tested in the context of real-life trauma. However, according to the authors, this new method has huge clinical implications.

The approach "holds the promise of reaching anyone anywhere," said Hendler and Keynan. The mobility and low cost of EEG contribute to its potential for a home-stationed bedside treatment for recent trauma patients or for stress resilience training for people prone to trauma.

Read the original article Here.

- Ultrasound Used to "Jumpstart" Patient's Brain

- By Jason von Stietz, M.A.

- September 9, 2016

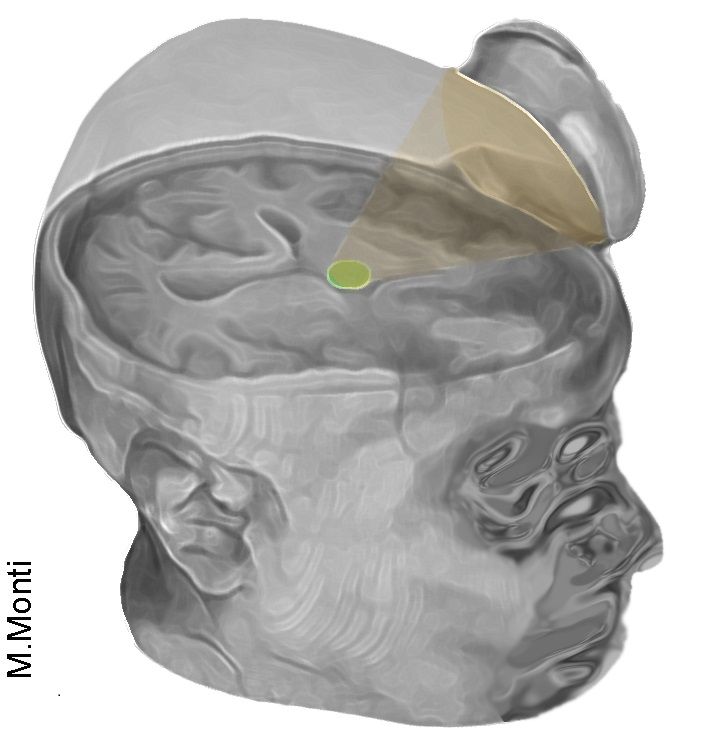

Photo Credit: Martin Monti/UCLA

Researchers at UCLA examined the use of ultrasound to stimulate the brain of a 25-year-old man recovering from a coma. The treatment involved directing low-intensity acoustic energy at the patient’s thalamus, which is typically underactive in such patients. The study was discussed in a recent article in Medical Xpress:

The technique uses sonic stimulation to excite the neurons in the thalamus, an egg-shaped structure that serves as the brain's central hub for processing information.

"It's almost as if we were jump-starting the neurons back into function," said Martin Monti, the study's lead author and a UCLA associate professor of psychology and neurosurgery. "Until now, the only way to achieve this was a risky surgical procedure known as deep brain stimulation, in which electrodes are implanted directly inside the thalamus," he said. "Our approach directly targets the thalamus but is noninvasive."

Monti said the researchers expected the positive result, but he cautioned that the procedure requires further study on additional patients before they determine whether it could be used consistently to help other people recovering from comas.

"It is possible that we were just very lucky and happened to have stimulated the patient just as he was spontaneously recovering," Monti said.

A report on the treatment is published in the journal Brain Stimulation. This is the first time the approach has been used to treat severe brain injury.

The technique, called low-intensity focused ultrasound pulsation, was pioneered by Alexander Bystritsky, a UCLA professor of psychiatry and biobehavioral sciences in the Semel Institute for Neuroscience and Human Behavior and a co-author of the study. Bystritsky is also a founder of Brainsonix, a Sherman Oaks, California-based company that provided the device the researchers used in the study.

That device, about the size of a coffee cup saucer, creates a small sphere of acoustic energy that can be aimed at different regions of the brain to excite brain tissue. For the new study, researchers placed it by the side of the man's head and activated it 10 times for 30 seconds each, in a 10-minute period.

Monti said the device is safe because it emits only a small amount of energy—less than a conventional Doppler ultrasound.

Before the procedure began, the man showed only minimal signs of being conscious and of understanding speech—for example, he could perform small, limited movements when asked. By the day after the treatment, his responses had improved measurably. Three days later, the patient had regained full consciousness and full language comprehension, and he could reliably communicate by nodding his head "yes" or shaking his head "no." He even made a fist-bump gesture to say goodbye to one of his doctors.

"The changes were remarkable," Monti said.

The technique targets the thalamus because, in people whose mental function is deeply impaired after a coma, thalamus performance is typically diminished. And medications that are commonly prescribed to people who are coming out of a coma target the thalamus only indirectly.

Under the direction of Paul Vespa, a UCLA professor of neurology and neurosurgery at the David Geffen School of Medicine at UCLA, the researchers plan to test the procedure on several more people beginning this fall at the Ronald Reagan UCLA Medical Center. Those tests will be conducted in partnership with the UCLA Brain Injury Research Center and funded in part by the Dana Foundation and the Tiny Blue Dot Foundation.

If the technology helps other people recovering from coma, Monti said, it could eventually be used to build a portable device—perhaps incorporated into a helmet—as a low-cost way to help "wake up" patients, perhaps even those who are in a vegetative or minimally conscious state. Currently, there is almost no effective treatment for such patients, he said.

Read the original article Here

- The Brain of a Musician: Case Study of Sting

- By Jason von Stietz, M.A.

- August 27, 2016

Photo Credit: Owen Egan

What does musical ability look like in the brain? Researchers at McGill University were presented with a unique opportunity: to understand how a musician’s brain operates, they were able to perform brain imaging on Sting, a multiple Grammy Award-winning artist. The study was discussed in a recent article in NeuroScientistNews:

What does the 1960s Beatles hit "Girl" have in common with Astor Piazzolla's evocative tango composition "Libertango"?

Probably not much, to the casual listener. But in the mind of one famously eclectic singer-songwriter, the two songs are highly similar. That's one of the surprising findings of an unusual neuroscience study based on brain scans of the musician Sting.

The paper, published in the journal Neurocase, uses recently developed imaging-analysis techniques to provide a window into the mind of a masterful musician. It also represents an approach that could offer insights into how gifted individuals find connections between seemingly disparate thoughts or sounds, in fields ranging from arts to politics or science.

"These state-of-the-art techniques really allowed us to make maps of how Sting's brain organizes music," says lead author Daniel Levitin, a cognitive psychologist at McGill University. "That's important because at the heart of great musicianship is the ability to manipulate in one's mind rich representations of the desired soundscape."

Lab tour with a twist

The research stemmed from a serendipitous encounter several years ago. Sting had read Levitin's book This Is Your Brain on Music and was coming to Montreal to play a concert. His representatives contacted Levitin and asked if he might take a tour of the lab at McGill. Levitin—whose lab has hosted visits from many popular musicians over the years—readily agreed. And he added a unique twist: "I asked if he also wanted to have his brain scanned. He said 'yes'."

So it was that McGill students in the Stewart Biology Building one day found themselves sharing an elevator with the former lead singer of The Police, who has won 16 Grammy Awards, including one in 1982 for the hit single "Don't Stand So Close To Me."

Both functional and structural scans were conducted in a single session at the brain imaging unit of McGill's Montreal Neurological Institute, the hot afternoon before a Police concert. A power outage knocked the entire campus off-line for several hours, threatening to cancel the experiment. Because it takes over an hour to reboot the fMRI machine, time grew short. Sting generously agreed to skip his soundcheck in order to do the scan.

Levitin then teamed up with Scott Grafton, a leading brain-scan expert at the University of California at Santa Barbara, to use two novel techniques to analyze the scans. The techniques, known as multivoxel pattern analysis and representational dissimilarity analysis, showed which songs Sting found similar to one another and which ones are dissimilar—based not on tests or questionnaires, but on activations of brain regions.

"At the heart of these methods is the ability to test if patterns of brain activity are more alike for two similar styles of music compared to different styles. This approach has never before been considered in brain imaging experiments of music," notes Scott Grafton.
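The core arithmetic of representational dissimilarity analysis can be sketched in a few lines. The Python below is a toy illustration, not the McGill/UCSB analysis pipeline: it assumes each song has already been reduced to a vector of voxel activations (the numbers are made up), scores dissimilarity as 1 minus the Pearson correlation between two songs' patterns, and reports the pair whose patterns are most alike. The function names `pearson`, `dissimilarity_matrix` and `most_similar_pair` are hypothetical.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation between two equal-length activation patterns."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def dissimilarity_matrix(patterns):
    """Map each (song_a, song_b) pair to 1 - r; 0 means identical patterns."""
    return {
        (a, b): 1.0 - pearson(patterns[a], patterns[b])
        for a, b in combinations(sorted(patterns), 2)
    }


def most_similar_pair(patterns):
    """Return the pair of songs whose activation patterns are most alike."""
    rdm = dissimilarity_matrix(patterns)
    return min(rdm, key=rdm.get)


# Hypothetical voxel patterns (made-up numbers, four voxels per song).
patterns = {
    "Girl": [1.0, 2.0, 3.0, 4.0],
    "Libertango": [1.1, 2.1, 2.9, 4.2],
    "Green Onions": [4.0, 1.0, 3.0, 2.0],
}

print(most_similar_pair(patterns))  # prints ('Girl', 'Libertango')
```

A real analysis works with far more voxels and adds statistical testing; this toy version only shows what "more alike" versus "less alike" means numerically.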

Unexpected connections

"Sting's brain scan pointed us to several connections between pieces of music that I know well but had never seen as related before," Levitin says. Piazzolla's "Libertango" and the Beatles' "Girl" proved to be two of the most similar. Both are in minor keys and include similar melodic motifs, the paper reveals. Another example: Sting's own "Moon over Bourbon Street" and Booker T. and the MG's "Green Onions," both of which are in the key of F minor, have the same tempo (132 beats per minute) and a swing rhythm.

The methods introduced in this paper, Levitin says, "can be used to study all sorts of things: how athletes organize their thoughts about body movements; how writers organize their thoughts about characters; how painters think about color, form and space."

Read the original article Here

- The Myth of the Second Head Trauma Cure

- By Jason von Stietz, M.A.

According to cartoons and other popular media, the only cure for amnesia caused by head trauma is a second head trauma. When a cartoon character such as Fred Flintstone loses his memory after a lump on the head, only a second lump brings it back. This idea seems silly to modern scientists and clinicians. So where did the myth come from? Its history was discussed in a recent article in Drexel Now:

While that worked in “The Flintstones” world and many other fictional realms, the medical community knows that like doesn’t cure like when it comes to head trauma.

However, a shockingly high proportion of the general public endorses the Flintstones solution, with 38–46 percent believing that a second blow to the head could cure amnesia, according to Drexel’s Mary Spiers. And, believe it or not, that belief was spurred by members of the medical community dating as far back as the early 19th century.

Spiers, PhD, associate professor in the College of Arts and Sciences’ Department of Psychology, traced the origins of the double-trauma amnesia cure belief in a paper for Neurology titled, “The Head Trauma Amnesia Cure: The Making of a Medical Myth.”

For a long time, scientists worked to figure out why the brain had two hemispheres.

“Studying the brain in the past was very challenging for several reasons,” Spiers explained. “There was no way to look into the living brain, as powerful functional imaging now allows us to do. Also, many people, including physicians, philosophers and those in the arts, speculated about the function of the brain, the soul and consciousness, so there were many competing ideas.”

At one point, scientists landed on the idea that it was a double organ, like a person’s eyes or ears, two pieces that were redundant — doing the same work.

Around the turn of the 19th century, a French scientist named Francois Xavier Bichat decided that the two hemispheres acted in synchrony. One side mirrored the other, and vice versa.

As such, he reasoned that an injury to one side of the head would throw off the other, “untouched” side.

“[Bichat] seriously proposed the notion that a second blow could restore the wits of someone who had a previous concussion,” Spiers wrote in her paper. “Bichat justified this idea by reasoning that hemispheres that are in balance with each other functioned better, while those out of balance cause perceptual and intellectual confusion.”

Bichat never cited any specific cases to back up his theory and, ironically enough, he died of a probable head injury in 1802.

“From my reading of Bichat’s work, it seems that he felt that the second trauma amnesia cure was a common occurrence and didn’t need the citation of an individual case,” Spiers said. “This was not unusual at the time, to forgo evidence like that.”

Despite the lack of evidence for his claims, Bichat’s ideas continued on after his death and became known as Bichat’s Law of Symmetry. Books in the following decades cited brain asymmetry as the root of different mental health issues.

Compounding the symmetry idea was also the dominant thought that human memories could never be lost. However, Samuel Taylor Coleridge — a philosopher, not a physician — was credited with popularizing that idea.

It wasn’t until the mid-1800s that scientists began to realize that taking a hit to the head might just destroy memories completely. A second blow wasn’t likely to jump-start the brain, they realized, but create further damage.

By this time, however, enough anecdotes about curing amnesia with a second head trauma were floating around from otherwise respectable scientists that the theory invaded the general public’s consciousness. With “no hard and fast lines between scientific and popular writing,” myths like the second trauma amnesia cure circulated out of control, according to Spiers.

Even as modern scientists began to fully understand the brain, the theory still stuck with a large portion of the public, resulting in the lumps we continue to see on cartoon characters’ heads.

“One of the issues we see in the persistence of this myth is that understanding how the brain forgets, recovers and/or loses information is a complicated matter that is still being studied by brain scientists,” Spiers said. “As individuals, we may have had the experience of a ‘memory jog’ or cue that reminds us of a long-forgotten memory. Because our own experiences serve as powerful evidence to us, this reinforces the myth that all memories are forever stored in the brain and only need some sort of jolt to come back.”

But, obviously, that jolt isn’t exactly advisable.

“In the case of a traumatic brain injury, learning and memory may be temporarily or permanently impaired due to swelling and injured or destroyed neurons,” Spiers concluded. “Some memories may return as the brain recovers, but a second brain injury is never a good treatment for a first brain injury.”

Read the original Here

- Study Identifies Biomarkers for Alzheimer's Disease

- By Jason von Stietz, M.A.

- August 15, 2016

Getty Images

Researchers at the University of Wisconsin-Madison have identified biomarkers that may help predict the development of Alzheimer’s disease. The researchers analyzed data from 175 participants, including brain scans, measures of cognitive abilities, and genotyping. The study was discussed in a recent article in NeuroscientistNews:

This approach – which statistically analyzes a panel of biomarkers – could help identify people most likely to benefit from drugs or other interventions to slow the progress of the disease. The study was published in the August edition of the journal Brain.

"The Alzheimer's Association estimates that if we had a prevention that merely pushed back the clinical symptoms of the disease by five years, it would almost cut in half the number of people projected to develop dementia," says Annie Racine, a doctoral candidate and the study's lead author. "That translates into millions of lives and billions of dollars saved."

Dr. Sterling Johnson, the study's senior author, says that while brain changes – such as the buildup of beta-amyloid plaques and tangles of another substance called tau – are markers of the disease, not everyone with these brain changes develops Alzheimer's symptoms.

"Until now, we haven't had a great way to use the biomarkers to predict who was going to develop clinical symptoms of the disease," Johnson says. "Although the new algorithm isn't perfect, now we can say with greater certainty who is at increased risk and more likely to decline."

The research team recruited 175 older adults at risk for Alzheimer's disease, and used statistical algorithms to categorize them into four clusters based on different patterns and profiles of pathology in their brains. Then, the researchers analyzed cognitive data from the participants to investigate whether these cluster groups differed on their cognitive abilities measured over as many as 10 years.
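The article does not say which clustering algorithm the team used. As a rough illustration of the general idea, grouping people by how similar their biomarker profiles are, here is a minimal k-means sketch in Python. It assumes each participant is represented as a vector of standardized biomarker values; k-means with a deterministic farthest-point start is a stand-in chosen for simplicity, not necessarily the study's method, and the profiles below are made-up toy data. The names `farthest_point_init` and `kmeans` are hypothetical.

```python
import math


def farthest_point_init(points, k):
    """Pick k starting centroids: the first point, then repeatedly the point
    farthest from every centroid chosen so far (deterministic)."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        nxt = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(nxt))
    return centroids


def kmeans(points, k, max_iter=100):
    """Return (labels, centroids) after Lloyd-style k-means iterations."""
    centroids = farthest_point_init(points, k)
    labels = None
    for _ in range(max_iter):
        # Assignment step: each profile joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the clustering has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(vals) / len(members) for vals in zip(*members)]
    return labels, centroids


# Hypothetical standardized biomarker profiles (toy numbers, 3 markers each).
profiles = [
    (0.0, 0.0, 0.0), (0.2, 0.1, 0.0),
    (3.0, 3.0, 0.0), (3.1, 2.9, 0.1),
    (1.5, 0.0, 3.0), (1.4, 0.1, 3.1),
    (0.0, 0.0, -3.0), (0.1, 0.0, -3.1),
]

labels, _ = kmeans(profiles, k=4)
print(labels)  # prints [0, 0, 1, 1, 2, 2, 3, 3]
```

Note that k-means does not choose the number of clusters for you; with real data the number of groups (four in this study) and the stability of the resulting clusters both have to be checked separately.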

As it turns out, the biomarker panels were predictive of cognitive decline over time. One cluster in particular stood out. The group that had a biomarker profile consistent with Alzheimer's – abnormal levels of tau and beta-amyloid in their cerebrospinal fluid – showed the steepest decline on cognitive tests of memory and language over the 10 years of testing. About two-thirds of the 22 people sorted into this group were also positive for the APOE4 gene—the greatest known risk factor for sporadic Alzheimer's disease—compared with about one-third in the other clusters.

At the other end of the spectrum, the largest group, 76 people, was sorted into a cluster that appears to consist of healthy aging individuals. They showed normal levels on the five biomarkers and did not decline cognitively over time.

In between were two clusters whose members weren't classified as having Alzheimer's but don't seem to be aging normally either. A group of 32 people showed signs of mixed Alzheimer's and vascular dementia. They had some of the amyloid changes in their cerebrospinal fluid, but also showed lesions in their brains' white matter, indicating scarring from small ischemic lesions that can be thought of as minor silent strokes.

The other cluster, of 45 people, had signs of brain atrophy: brain imaging showed that the hippocampus, the brain's memory center, was significantly smaller than in the other groups. The authors speculate this group could have intrinsic brain vulnerability or could be affected by some other process that differentiates them from healthy aging. Both in-between clusters showed non-specific decline on a test of global cognitive functioning, which further differentiates them from the healthy aging cluster.

The study participants came from a group of more than 1,800 people enrolled in two studies – the Wisconsin Alzheimer's Disease Research Center (WADRC) study and the Wisconsin Registry for Alzheimer's Prevention (WRAP). Both groups are enriched for people at risk for getting Alzheimer's because about ¾ of participants have a parent with the disease.

"This study shows that just having a family history doesn't mean you are going to get this disease," Johnson says. "Some of the people in our studies are on a trajectory for Alzheimer's, but more of them are aging normally, and some are on track to have a different type of brain disease." A comprehensive panel of biomarkers – such as the one evaluated in this study – could help to predict those variable paths, paving the way for early interventions to stop or slow the disease.

The authors of the study are affiliated with the WADRC, the Wisconsin Alzheimer's Institute, the Institute on Aging, the Waisman Center, and the Neuroscience and Public Policy program, all at UW-Madison; and the Geriatrics Research Education and Clinical Center at the William S. Middleton Veterans Hospital.

Read the original article Here


- New Imaging Tool Measures Synaptic Density

- By Jason von Stietz, M.A.

- July 29, 2016


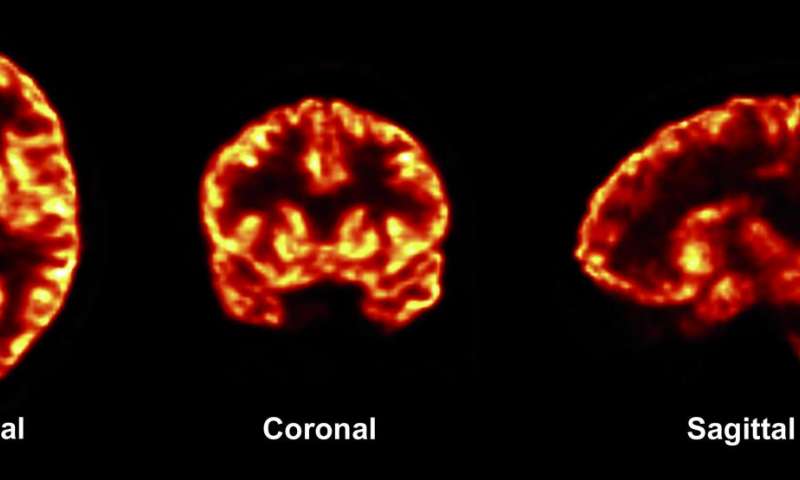

Photo Credit: Finnema et al. (2016), Science Translational Medicine

Previously, researchers have only been able to study the synaptic changes caused by brain disorders through autopsy. However, researchers from Yale recently developed a new approach to brain scanning that allows synaptic density to be measured. The study was discussed in a recent article in Medical Xpress:

The study was published July 20 in Science Translational Medicine.

Certain changes in synapses—the junctions between nerve cells in the brain—have been linked with brain disorders. But researchers have only been able to evaluate synaptic changes during autopsies. For their study, the research team set out to develop a method for measuring the number of synapses, or synaptic density, in the living brain.

To quantify synapses throughout the brain, professor of radiology and biomedical imaging Richard Carson and his coauthors combined PET scanning technology with biochemistry. They developed a radioactive tracer that, when injected into the body, binds with a key protein that is present in all synapses across the brain. They observed the tracer through PET imaging and then applied mathematical tools to quantify synaptic density.

The researchers used the imaging technique in both baboons and humans. They confirmed that the new method did serve as a marker for synaptic density. It also revealed synaptic loss in three patients with epilepsy compared to healthy individuals.

"This is the first time we have synaptic density measurement in live human beings," said Carson, who is senior author on the study. "Up to now any measurement of synaptic density was postmortem."

The finding has several potential applications. With this noninvasive method, researchers may be able to follow the progression of many brain disorders, including epilepsy and Alzheimer's disease, by measuring changes in synaptic density over time. Another application may be in assessing how pharmaceuticals slow the loss of neurons. "This opens the door to follow the natural evolution of synaptic density with normal aging and follow how drugs can alter synapses or synapse formation."

Carson and his colleagues plan future studies involving PET imaging of synapses to research epilepsy and other brain disorders, including Alzheimer's disease, schizophrenia, depression, and Parkinson's disease. "There are many diseases where neuro-degeneration comes into play," he noted.

Read the original article Here


- Impact Of Baby's Cries On Cognition

- By Jason von Stietz, M.A.

- July 20, 2016


Getty Images

People often refer to “parental instincts” as an innate drive to care for one’s offspring. However, little is known about the role cognition plays in this instinct. Researchers at the University of Toronto examined the impact of the sound of a baby’s cries on performance during a cognitive task. The study was discussed in a recent article in Neuroscience Stuff:

“Parental instinct appears to be hardwired, yet no one talks about how this instinct might include cognition,” says David Haley, co-author and Associate Professor of psychology at U of T Scarborough.

“If we simply had an automatic response every time a baby started crying, how would we think about competing concerns in the environment or how best to respond to a baby’s distress?”

The study looked at the effect infant vocalizations—in this case audio clips of a baby laughing or crying—had on adults completing a cognitive conflict task. The researchers used the Stroop task, in which participants were asked to rapidly identify the color of a printed word while ignoring the meaning of the word itself. Brain activity was measured using electroencephalography (EEG) during each trial of the cognitive task, which took place immediately after a two-second audio clip of an infant vocalization.

The brain data revealed that the infant cries reduced attention to the task and triggered greater cognitive conflict processing than the infant laughs. Cognitive conflict processing is important because it controls attention—one of the most basic executive functions needed to complete a task or make a decision, notes Haley, who runs U of T’s Parent-Infant Research Lab.

“Parents are constantly making a variety of everyday decisions and have competing demands on their attention,” says Joanna Dudek, a graduate student in Haley’s Parent-Infant Research Lab and the lead author of the study.

“They may be in the middle of doing chores when the doorbell rings and their child starts to cry. How do they stay calm, cool and collected, and how do they know when to drop what they’re doing and pick up the child?”

A baby’s cry has been shown to cause aversion in adults, but it could also create an adaptive response by “switching on” the cognitive control parents use in effectively responding to their child’s emotional needs while also addressing other demands in everyday life, adds Haley.

“If an infant’s cry activates cognitive conflict in the brain, it could also be teaching parents how to focus their attention more selectively,” he says.

“It’s this cognitive flexibility that allows parents to rapidly switch between responding to their baby’s distress and other competing demands in their lives—which, paradoxically, may mean ignoring the infant momentarily.”

The findings add to a growing body of research suggesting that infants occupy a privileged status in our neurobiological programming, one deeply rooted in our evolutionary past. But, as Haley notes, they also reveal an important adaptive cognitive function in the human brain.

Read the original article here


- Hypoconnectivity Found In Brains Of Those With Intermittent Explosive Disorder

- By Jason von Stietz, M.A.

- July 15, 2016


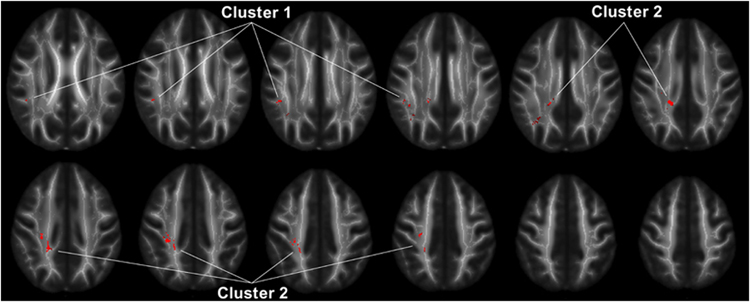

Photo Credit: Lee et al., Neuropsychopharmacology

Why do people with anger issues tend to misunderstand social situations? Researchers at the University of Chicago Medical School used diffusion tensor imaging to compare the brains of people with intermittent explosive disorder (IED) to those of healthy controls. The brains of people with IED showed less white matter connecting the frontal cortex to the parietal lobes. The study was discussed in a recent article in Medical Xpress:

In a new study published in the journal Neuropsychopharmacology, neuroscientists from the University of Chicago show that white matter in a region of the brain called the superior longitudinal fasciculus (SLF) has less integrity and density in people with IED than in healthy individuals and those with other psychiatric disorders. The SLF connects the brain's frontal lobe—responsible for decision-making, emotion and understanding consequences of actions—with the parietal lobe, which processes language and sensory input.

"It's like an information superhighway connecting the frontal cortex to the parietal lobes," said Royce Lee, MD, associate professor of psychiatry and behavioral neuroscience at the University of Chicago and lead author of the study. "We think that points to social cognition as an important area to think about for people with anger problems."

Lee and his colleagues, including senior author Emil Coccaro, MD, Ellen C. Manning Professor and Chair of Psychiatry and Behavioral Neuroscience at UChicago, used diffusion tensor imaging, a form of magnetic resonance imaging (MRI) that measures the volume and density of white matter connective tissue in the brain. Connectivity is a critical issue because the brains of people with psychiatric disorders usually show very few physical differences from healthy individuals.

"It's not so much how the brain is structured, but the way these regions are connected to each other," Lee said. "That might be where we're going to see a lot of the problems in psychiatric disorders, so white matter is a natural place to start since that's the brain's natural wiring from one region to another."

People with anger issues tend to misunderstand the intentions of other people in social situations. They think others are being hostile when they are not and draw the wrong conclusions about their intentions. They also don't take in all the data from a social interaction, such as body language or certain words, and notice only those things that reinforce their belief that the other person is challenging them.

Decreased connectivity between regions of the brain that process a social situation could lead to the impaired judgment that escalates to an explosive outburst of anger. The discovery of connectivity deficits in a specific region of the brain like the SLF provides an important starting point for more research on people with IED, as well as those with borderline personality disorder, who share similar social and emotional problems and appear to have the same abnormality in the SLF.

"This is another example of tangible deficits in the brains of those with IED that indicate that impulsive aggressive behavior is not simply 'bad behavior' but behavior with a real biological basis that can be studied and treated," Coccaro said.

Read the original article Here


Subscribe to our Feed via RSS