Blog

- Being an Only Child Affects Brain Structure and Personality

- By Jason von Stietz, M.A.

- May 30, 2017

Image credit: Getty Images

It has long been hypothesized that individuals raised as only children develop a certain type of personality. However, does being an only child influence the structural development of an individual’s brain? Researchers at Southwest University in China investigated the relationship between the MRI scans and personalities of 303 college-aged participants. Findings indicated that only children were more likely to demonstrate greater flexibility in thinking and more grey matter volume in the supramarginal gyrus, which is associated with language perception and processing. The study was discussed in a recent article in Science Alert:

The mix of young people in China offers a broad pool of candidates for this area of research, owing to the nation's long-lasting one-child policy, which limited many but not all families to only raising a single child in between 1979 and 2015.

The common stereotype about being an only child is that growing up without siblings influences an individual's behaviour and personality traits, making them more selfish and less likely to share with their peers.

Previous research has borne some of this conventional wisdom out - but also demonstrated that only children can receive cognitive benefits as a result of their solo upbringing.

The participants in this latest study were approximately half only children (and half children with siblings), and were given cognitive tests designed to measure their intelligence, creativity, and personality, in addition to scanning their brains with MRI machines.

Although the results didn't demonstrate any difference in terms of intelligence between the two groups, they did reveal that only children exhibited greater flexibility in their thinking - a key marker of creativity per the Torrance Tests of Creative Thinking.

While only children showed greater flexibility, they also demonstrated less agreeableness in personality tests under what's called the Revised NEO Personality Inventory. Agreeableness is one of the five chief measures tested under the system, with the other four being extraversion, conscientiousness, neuroticism, and openness to experience.

But more importantly than the behavioural data - which have been the focus of many other studies - the MRI results actually demonstrated neurological differences in the participants' grey matter volume (GMV) as a result of their upbringing.

In particular, the results showed that only children showed greater supramarginal gyrus volumes - a portion of the parietal lobe thought to be associated with language perception and processing, and which in the study correlated to the only children's greater flexibility.

By contrast, the brains of only children revealed less volume in other areas, including the medial prefrontal cortex (mPFC) - associated with emotional regulation, such as personality and social behaviours - which the team found to be correlated with their lower scores on agreeableness.

While the researchers aren't drawing firm conclusions on why only children exhibit these differences, they suggest it's possible that parents may foster greater creativity in only children by devoting more time to them - and possibly placing greater expectations on them.

Meanwhile, they hypothesise that only children's lesser agreeableness could result from excessive attention from family members, less exposure to external social groups, and more focus on solitary activities while growing up.

It's important to note that there are some limitations to the study - first off, all the participants were highly educated young people taken from a specific part of the world, and the results only reflect testing from one point in time.

That said, the researchers say it's the first evidence that differences in the anatomical structures of the brain are linked to differing behaviour in terms of flexibility and agreeableness.

"Additionally, our results contribute to the understanding of the neuroanatomical basis of the differences in cognitive function and personality between only-children and non-only-children," the authors write in their study.

While there's still a lot we don't understand about what's going on here, it's clear that there's a link between our family environments and the way our brain structure develops, and it'll be fascinating to see where this direction of research takes us in the future.

Read the original article Here

- Comments (0)

- Bipolar Disorder and the Brain

- By Jason von Stietz, M.A.

- May 18, 2017

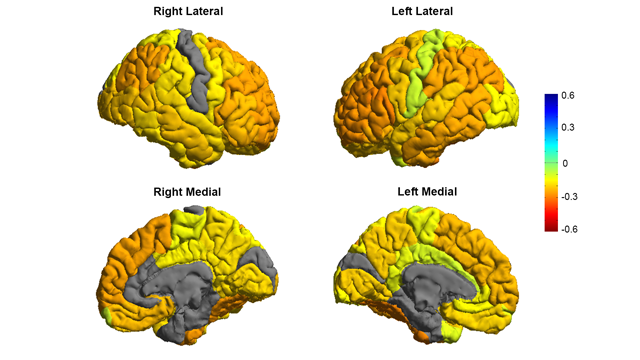

Image courtesy of the ENIGMA Bipolar Consortium/Derrek Hibar et al.

Is the brain of someone with bipolar disorder different than the brain of their healthy counterpart? A study led by researchers from the University of Southern California used MRI scans to compare the brains of individuals with bipolar disorder to the brains of individuals in a healthy control group. They found that the brains of people with bipolar disorder often had reductions in grey matter in frontal regions associated with self-control. The study was discussed in a recent article in Neuroscience for Technology Networks:

In the largest MRI study to date on patients with bipolar disorder, a global consortium published new research showing that people with the condition have differences in the brain regions that control inhibition and emotion.

By revealing clear and consistent alterations in key brain regions, the findings published in Molecular Psychiatry on May 2 offer insight to the underlying mechanisms of bipolar disorder.

"We created the first global map of bipolar disorder and how it affects the brain, resolving years of uncertainty on how people's brains differ when they have this severe illness," said Ole A. Andreassen, senior author of the study and a professor at the Norwegian Centre for Mental Disorders Research at the University of Oslo.

Bipolar disorder affects about 60 million people worldwide, according to the World Health Organization. It is a debilitating psychiatric disorder with serious implications for those affected and their families. However, scientists have struggled to pinpoint neurobiological mechanisms of the disorder, partly due to the lack of sufficient brain scans.

The study was part of an international consortium led by the USC Stevens Neuroimaging and Informatics Institute at the Keck School of Medicine of USC: ENIGMA (Enhancing Neuro Imaging Genetics Through Meta Analysis) spans 76 centers and includes 26 different research groups around the world.

Thousands of MRI scans

The researchers measured the MRI scans of 6,503 individuals, including 2,447 adults with bipolar disorder and 4,056 healthy controls. They also examined the effects of commonly used prescription medications, age of illness onset, history of psychosis, mood state, age and sex differences on cortical regions.

The study showed thinning of gray matter in the brains of patients with bipolar disorder when compared with healthy controls. The greatest deficits were found in parts of the brain that control inhibition and motivation - the frontal and temporal regions.

Some of the bipolar disorder patients with a history of psychosis showed greater deficits in the brain's gray matter. The findings also showed different brain signatures in patients who took lithium, anti-psychotics and anti-epileptic treatments. Lithium treatment was associated with less thinning of gray matter, which suggests a protective effect of this medication on the brain.

"These are important clues as to where to look in the brain for therapeutic effects of these drugs," said Derrek Hibar, first author of the paper and a professor at the USC Stevens Neuroimaging and Informatics Institute when the study was conducted. He was a former visiting researcher at the University of Oslo and is now a senior scientist at Janssen Research and Development, LLC.

Early detection

Future research will test how well different medications and treatments can shift or modify these brain measures as well as improve symptoms and clinical outcomes for patients.

Mapping the affected brain regions is also important for early detection and prevention, said Paul Thompson, director of the ENIGMA consortium and co-author of the study.

"This new map of the bipolar brain gives us a roadmap of where to look for treatment effects," said Thompson, an associate director of the USC Stevens Neuroimaging and Informatics Institute at the Keck School of Medicine. "By bringing together psychiatrists worldwide, we now have a new source of power to discover treatments that improve patients' lives."

This article has been republished from materials provided by University of Southern California. Note: material may have been edited for length and content. For further information, please contact the cited source.

Read the original article Here

- Comments (0)

- Earlier Lifestyle Factors Relate to Cognitive Health in Later Life

- By Jason von Stietz, M.A.

- April 30, 2017

Image credit: Getty Images

Is there a secret to being mentally fit in old age? Will what you do in your youth and middle years impact your brain in older age? Researchers examined the relationship between lifestyle factors, such as diet, physical activity, alcohol consumption, smoking, and social and cognitive activity, and cognitive functioning in older age. Findings indicated that earlier life health and activity may lead to greater cognitive health in later life. The study was discussed in a recent article in Medical Xpress:

The large-scale investigation, published in the journal PLOS Medicine and led by the University of Exeter, used data from more than 2,000 mentally fit people over the age of 65 to examine the theory that experiences in early or mid life which challenge the brain make people more resilient to changes resulting from age or illness – they have higher "cognitive reserve".

The analysis, funded by the Economic and Social Research Council (ESRC), found that people with higher levels of reserve are more likely to stay mentally fit for longer, making the brain more resilient to illnesses such as dementia.

The research team included collaborators from the universities of Bangor, Newcastle and Cambridge.

Linda Clare, Professor of Clinical Psychology of Ageing and Dementia at the University of Exeter, said: "Losing mental ability is not inevitable in later life. We know that we can all take action to increase our chances of maintaining our own mental health, through healthy living and engaging in stimulating activities. It's important that we understand how and why this occurs, so we can give people meaningful and effective measures to take control of living full and active lives into older age.

"People who engage in stimulating activity which stretches the brain, challenging it to use different strategies that exercise a variety of networks, have higher 'Cognitive reserve'. This builds a buffer in the brain, making it more resilient. It means signs of decline only become evident at a higher threshold of illness or decay than when this buffer is absent."

The research team analysed data from 2,315 mentally fit participants aged over 65 years who took part in the first wave of interviews for the Cognitive Function and Ageing Study Wales (CFAS-Wales).

They analysed whether a healthy lifestyle was associated with better performance on a mental ability test. They found that a healthy diet, more physical activity, more social and mentally stimulating activity and moderate alcohol consumption all seemed to boost cognitive performance.

Professor Bob Woods of Bangor University, who leads the CFAS Wales study, said: "We found that people with a healthier lifestyle had better scores on tests of mental ability, and this was partly accounted for by their level of cognitive reserve.

"Our results highlight the importance of policies and measures that encourage older people to make changes in their diet, exercise more, and engage in more socially oriented and mentally stimulating activities."

Professor Fiona Matthews of Newcastle University, who is principal statistician on the CFAS studies, said "Many of the factors found here to be important are not only healthy for our brain, but also help at younger age avoiding heart disease."

Read the original article Here

- Comments (0)

- tDCS Increases Honest Behavior

- By Jason von Stietz, M.A.

- April 21, 2017

Image credit: Getty Images

Can honesty be strengthened like a muscle? Researchers at the University of Zurich led a study examining the relationship between honesty and a form of non-invasive brain stimulation known as transcranial direct current stimulation (tDCS). Findings indicated that tDCS applied over the right dorsolateral prefrontal cortex increased honesty in situations in which it is tempting to cheat for personal gain. The study was discussed in a recent article in Medical Xpress:

Honesty plays a key role in social and economic life. Without honesty, promises are not kept, contracts are not enforced, taxes remain unpaid. Despite the importance of honesty for society, its biological basis remains poorly understood. Researchers at the University of Zurich, together with colleagues from Chicago and Boston, now show that honest behavior can be increased by means of non-invasive brain stimulation. The results of their research highlight a deliberation process between honesty and self-interest in the right dorsolateral prefrontal cortex (rDLPFC).

Occasional lies for material self interest

In their die-rolling experiment, the participants could increase their earnings by cheating rather than telling the truth. The researchers found that people cheated a significant amount of the time. However, many participants also stuck to the truth. "Most people seem to weigh motives of self-interest against honesty on a case-by-case basis; they cheat a little but not on every possible occasion," explains Michel Maréchal, UZH Professor for Experimental Economics. However, about 8% of the participants cheated whenever possible and maximized their profit.

Less lies through brain stimulation

The researchers applied transcranial direct current stimulation over a region in the right dorsolateral prefrontal cortex (rDLPFC). This noninvasive brain stimulation method makes brain cells more sensitive, i.e., they are more likely to be active. When the researchers applied this stimulation during the task, participants were less likely to cheat. However, the number of consistent cheaters remained the same. Christian Ruff, UZH Professor of Neuroeconomics, points out: "This finding suggests that the stimulation mainly reduced cheating in participants who actually experienced a moral conflict, but did not influence the decision making process in those who were committed to maximizing their earnings."

Conflict between money and morals

The researchers found that the stimulation only affected the process of weighing up material versus moral motives. They found no effects for other types of conflict that do not involve moral concerns (i.e., financial decisions involving risk, ambiguity, and delayed rewards). Similarly, an additional experiment showed that the stimulation did not affect honest behavior when cheating led to a payoff for another person instead of oneself and the conflict was therefore between two moral motives. The pattern of results suggests that the stimulated neurobiological process specifically resolves trade-offs between material self-interest and honesty.

Developing an understanding of the biological basis of behavior

According to the researchers, these findings are an important first step in identifying the brain processes that allow people to behave honestly. "These brain processes could lie at the heart of individual differences and possibly pathologies of honest behavior", explains Christian Ruff. And finally, the new results raise the question to what degree honest behavior is based on biological predispositions, which may be crucial for jurisdiction. Michel Maréchal summarizes: "If breaches of honesty indeed represent an organic condition, our results question to what extent people can be made fully liable for their wrongdoings."

Read the original article Here

- Comments (0)

- Can Fast fMRI Monitor Brain Activity of Thoughts?

- By Jason von Stietz, M.A.

- March 30, 2017

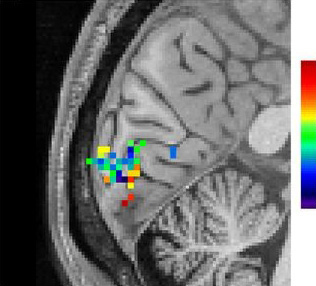

Photo Credit: Lewis et al.

Can fMRI detect human thought? Researchers from Harvard utilized recent developments in fast fMRI techniques to examine rapid oscillations of brain activity during human thought. Researchers showed participants rapidly oscillating images and monitored the rapid oscillations of brain activity in the visual cortex. These findings mark the first steps toward better studying neural networks as they produce human thought. The study was funded by the National Institutes of Health and discussed in a recent press release:

By significantly increasing the speed of functional MRI (fMRI), NIBIB-funded researchers have been able to image rapidly fluctuating brain activity during human thought. fMRI measures changes in blood oxygenation, which were previously thought to be too slow to detect the subtle neuronal activity associated with higher order brain functions. The new discovery that fast fMRI can detect rapid brain oscillations is a significant step towards realizing a central goal of neuroscience research: mapping the brain networks responsible for human cognitive functions such as perception, attention, and awareness.

“A critical aim of the President’s BRAIN Initiative1 is to move neuroscience into a new realm where we can identify and track functioning neural networks non-invasively,” explains Guoying Liu, Ph.D., Director of the NIBIB program in Magnetic Resonance Imaging. “This work demonstrates the potential of fMRI for mapping healthy neural networks as well as those that may contribute to neurological diseases such as dementia and other mental health disorders, which are significant national and global health problems.”

fMRI works by detecting local increases in oxygen as blood is delivered to a working part of the brain. The technique has been instrumental for identifying which areas in the brain control functions such as vision, hearing, or touch. However, standard fMRI can only detect the blood flow coming to replenish an area of the brain several seconds after it has performed a function. It was generally accepted that this was the limit of what could be detected by fMRI—identification of a region in the brain that had responded to a large stimulus, such as a continuous 30 second “blast” of bright light.

Combining several new techniques, Jonathan R. Polimeni, Ph.D., senior author of the study, and his colleagues at Harvard’s Athinoula A. Martinos Center for Biomedical Imaging, applied fast fMRI in an effort to track neuronal networks that control human thought processes, and found that they could now measure rapidly oscillating brain activity. The results of this groundbreaking work are reported in the October 2016 issue of the Proceedings of the National Academy of Sciences.2

The researchers used fast fMRI in human volunteers observing a rapidly fluctuating checkerboard pattern. The fast fMRI was able to detect the subtle and very rapid oscillations in cerebral blood flow in the brain’s visual cortex as the volunteers observed the changing pattern.

“The oscillating checkerboard pattern is a more ‘naturalistic’ stimulus, in that its timing is similar to the very subtle neural oscillations made during normal thought processes,” explains Polimeni. “The fast fMRI detects the induced neural oscillations that allow the brain to understand what the eye is observing: the changing checkerboard pattern. These subtle oscillations were completely undetectable with standard fMRI. This exciting result opens the possibility of using fast fMRI to image neural networks as they guide the process of human thought.”

One such possibility is suggested by first author of the study Laura D. Lewis, Ph.D. “This technique now gives us a method for obtaining much more detailed information about the complex brain activity that takes place during sleep, as well as other dynamic switches in brain states, such as when under anesthesia and during hallucinations.”

Concludes Polimeni, “It had always been thought that fMRI had the potential to play a major role in these types of studies. Meaningful progress in cognitive neuroscience depends on mapping patterns of brain activity, which are constantly and rapidly changing with every experience we have. Thus, we are extremely excited to see our work contribute significantly to achieving this goal.”

Read the original article Here

- Comments (2)

- Interhemispheric Connectivity Related to Creativity

- By Jason von Stietz, M.A.

- March 23, 2017

Image credit: Shutterstock

Popular media often suggests that the “right brain” is more associated with creativity and artistic ability. Researchers at Duke University and the University of Padova examined the relationship between diffusion tensor imaging brain scans and measures of creativity, analyzing the connections among 68 brain regions in healthy college-age participants. Findings indicated that greater interhemispheric connectivity was related to higher creativity. The study was discussed in a recent article in Medical Xpress:

For the study, statisticians David Dunson of Duke University and Daniele Durante of the University of Padova analyzed the network of white matter connections among 68 separate brain regions in healthy college-age volunteers.

The brain's white matter lies underneath the outer grey matter. It is composed of bundles of wires, or axons, which connect billions of neurons and carry electrical signals between them.

A team led by neuroscientist Rex Jung of the University of New Mexico collected the data using an MRI technique called diffusion tensor imaging, which allows researchers to peer through the skull of a living person and trace the paths of all the axons by following the movement of water along them. Computers then comb through each of the 1-gigabyte scans and convert them to three-dimensional maps—wiring diagrams of the brain.

Jung's team used a combination of tests to assess creativity. Some were measures of a type of problem-solving called "divergent thinking," or the ability to come up with many answers to a question. They asked people to draw as many geometric designs as they could in five minutes. They also asked people to list as many new uses as they could for everyday objects, such as a brick or a paper clip. The participants also filled out a questionnaire about their achievements in ten areas, including the visual arts, music, creative writing, dance, cooking and science.

The responses were used to calculate a composite creativity score for each person.

Dunson and Durante trained computers to sift through the data and identify differences in brain structure.

They found no statistical differences in connectivity within hemispheres, or between men and women. But when they compared people who scored in the top 15 percent on the creativity tests with those in the bottom 15 percent, high-scoring people had significantly more connections between the right and left hemispheres.

The differences were mainly in the brain's frontal lobe.
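The top-versus-bottom 15 percent comparison described above can be illustrated with a short sketch. This is not the Bayesian network model that Dunson and Durante developed; it only shows the simpler idea of picking out the extreme scorers and comparing a per-person summary between them. The participant IDs, creativity scores and link counts are invented toy values, and the function names are hypothetical.

```python
from statistics import mean


def extreme_groups(scores, fraction=0.15):
    """Return (low_ids, high_ids): participants in the bottom and top
    `fraction` of composite creativity scores."""
    ranked = sorted(scores, key=scores.get)       # lowest score first
    k = max(1, round(len(ranked) * fraction))     # group size, at least 1
    return ranked[:k], ranked[-k:]


def mean_links(ids, link_counts):
    """Average number of cross-hemisphere connections for a group."""
    return mean(link_counts[pid] for pid in ids)


# Toy data (assumed for illustration only): 20 participants whose
# cross-hemisphere link counts rise with their creativity score.
creativity = {f"p{i:02d}": i for i in range(20)}
links = {pid: 100 + 2 * score for pid, score in creativity.items()}

low, high = extreme_groups(creativity)
gap = mean_links(high, links) - mean_links(low, links)
print(low, high, gap)
```

A real analysis would also need a statistical test of whether such a gap could arise by chance, which is the part the study's methods address.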

Dunson said their approach could also be used to predict the probability that a person will be highly creative simply based on his or her brain network structure. "Maybe by scanning a person's brain we could tell what they're likely to be good at," Dunson said.

The study is part of a decade-old field, connectomics, which uses network science to understand the brain. Instead of focusing on specific brain regions in isolation, connectomics researchers use advanced brain imaging techniques to identify and map the rich, dense web of links between them.

Dunson and colleagues are now developing statistical methods to find out whether brain connectivity varies with I.Q., whose relationship to creativity is a subject of ongoing debate.

In collaboration with neurology professor Paul Thompson at the University of Southern California, they're also using their methods for early detection of Alzheimer's disease, to help distinguish it from normal aging.

By studying the patterns of interconnections in healthy and diseased brains, they and other researchers also hope to better understand dementia, epilepsy, schizophrenia and other neurological conditions such as traumatic brain injury or coma.

"Data sharing in neuroscience is increasingly more common as compared to only five years ago," said Joshua Vogelstein of Johns Hopkins University, who founded the Open Connectome Project and processed the raw data for the study.

Just making sense of the enormous datasets produced by brain imaging studies is a challenge, Dunson said.

Most statistical methods for analyzing brain network data focus on estimating properties of single brains, such as which regions serve as highly connected hubs. But each person's brain is wired differently, and techniques for identifying similarities and differences in connectivity across individuals and between groups have lagged behind.

The study appears online and will be published in a forthcoming issue of the journal Bayesian Analysis.

Read the original article Here

- Comments (0)

- Underestimating the Value Of Being in Someone Else's Shoes

- By Jason von Stietz, M.A.

- March 19, 2017

Image credit: Getty Images

Do we need to walk a mile in people’s shoes to understand them? Although people are often confident in their understanding of others’ emotions, recent research found that individuals often overestimate the accuracy of their understanding. Furthermore, when individuals simulate the experiences of others and infer their emotional response, they are significantly more accurate. The study was discussed in a recent article in Medical Xpress:

We tend to believe that people telegraph how they're feeling through facial expressions and body language and we only need to watch them to know what they're experiencing—but new research shows we'd get a much better idea if we put ourselves in their shoes instead. The findings are published in Psychological Science, a journal of the Association for Psychological Science.

"People expected that they could infer another's emotions by watching him or her, when in fact they were more accurate when they were actually in the same situation as the other person. And this bias persisted even after our participants gained firsthand experience with both strategies," explain study authors Haotian Zhou (Shanghai Tech University) and Nicholas Epley (University of Chicago).

To explore how we go about understanding others' minds, Zhou, Epley, and co-author Elizabeth Majka (Elmhurst College) decided to focus on two potential mechanisms: theorization and simulation. When we theorize about someone's experience, we observe their actions and make inferences based on our observations. When we simulate someone's experience, we use our own experience of the same situation as a guide.

Based on previous research showing that people tend to assume that our feelings 'leak out' through our behavior, Zhou, Epley, and Majka hypothesized that people would overestimate the usefulness of theorizing about another person's experience. And given that we tend to think that individual experiences are unique, the researchers also hypothesized that people would underestimate the usefulness of simulating another person's experience.

In one experiment, the researchers asked 12 participants to look at a series of 50 pictures that varied widely in emotional content, from very negative to positive. A webcam recorded their faces as these "experiencers" rated their emotional feelings for each picture. The researchers then brought in a separate group of 73 participants and asked them to predict the experiencers' ratings for each picture. Some of these "predictors" simulated the experience, looking at each picture; others theorized about the experience, looking at the webcam recording of the experiencer; and a third group were able to simulate and theorize at the same time, looking at both the picture and accompanying recording.

The results revealed that the predictors were much more accurate when they saw the pictures just as the experiencer had than they were when they saw the recording of the experiencer's face. Interestingly, seeing both the picture and the recording simultaneously yielded no additional benefit—being able to simulate the experience seemed to underlie participants' accuracy.

Despite this, people didn't seem to appreciate the benefit of simulation. In a second experiment, only about half of the predictors who were allowed to choose a strategy opted to use simulation. As before, predictors who simulated the rating experience were much more accurate in predicting the experiencer's feelings, regardless of whether they chose that strategy or were assigned to it.

In a third experiment, the researchers allowed for dynamic choice, assuming that predictors may increase in accuracy over time if they were able to choose their strategy before each trial. The results showed, once again, that simulation was the better strategy across the board—still, participants who had the ability to choose opted to simulate only about 48% of the time.

A fourth experiment revealed that simulation was the better strategy even when experiencers had been told to make their reactions as expressive and "readable" as possible.

"Our most surprising finding was that people committed the same mistakes when trying to understand themselves," Zhou and Epley note.

Participants in a fifth experiment expected they would be more accurate if they got to watch the expressions they had made while looking at emotional pictures one month earlier—but the findings showed they were actually better at estimating how they had felt if they simply viewed the pictures again.

"They dramatically overestimated how much their own face would reveal, and underestimated the accuracy they would glean from being in their own past shoes again," the researchers explain.

Although reading other people's mental states is an essential part of everyday life, these experiments show that we don't always pick the best strategy for the task.

According to Zhou and Epley, these findings help to shed light on the tactics that people use to understand each other.

"Only by understanding why our inferences about each other sometimes go astray can we learn how to understand each other better," the researchers conclude.

Read the original article Here

- Comments (0)

- Brain Scans May Predict Adolescent Drug Use

- By Jason von Stietz, M.A.

- February 26, 2017

Image credit: Getty Images

Is it possible to predict who will face significant drug problems in their adolescence? Some adolescents who battle addictions broadcast all the warning signs, whereas others appear to show no warnings at all. In an effort to predict drug use, researchers examined the relationship between brain scans, novelty seeking, and future drug use. The study was discussed in a recent article in Medical Xpress:

There's an idea out there of what a drug-addled teen is supposed to look like: impulsive, unconscientious, smart, perhaps – but not the most engaged. While personality traits like that could signal danger, not every adolescent who fits that description becomes a problem drug user. So how do you tell who's who?

There's no perfect answer, but researchers report February 21 in Nature Communications that they've found a way to improve our predictions – using brain scans that can tell, in a manner of speaking, who's bored by the promise of easy money, even when the kids themselves might not realize it.

That conclusion grew out of a collaboration between Brian Knutson, a professor of psychology at Stanford, and Christian Büchel, a professor of medicine at Universitätsklinikum Hamburg Eppendorf. With support from the Stanford Neurosciences Institute's NeuroChoice program, which Knutson co-directs, the pair started sorting through an intriguing dataset covering, among other things, 144 European adolescents who scored high on a test of what's called novelty seeking – roughly, the sorts of personality traits that might indicate a kid is at risk for drug or alcohol abuse.

Novelty seeking in a brain scanner

Novelty seeking isn't inherently bad, Knutson said. On a good day, the urge to take a risk on something new can drive innovation. On a bad day, however, it can lead people to drive recklessly, jump off cliffs and ingest whatever someone hands out at a party. And psychologists know that kids who score high on tests of novelty seeking are on average a bit more likely to abuse drugs. The question was, could there be a better test, one both more precise and more individualized, that could tell whether novelty seeking might turn into something more destructive.

Knutson and Büchel thought so, and they suspected that a brain-scanning test called the Monetary Incentive Delay Task, or MID, could be the answer. Knutson had developed the task early in his career as a way of targeting a part of the brain now known to play a role in mentally processing rewards like money or the high of a drug.

The task works like this. People lie down in an MRI brain scanner to play a simple video game for points, which they can eventually convert to money. More important than the details of the game, however, is this: At the start of each round, each player gets a cue about how many points he stands to win during the round. It's at that point that players start to anticipate future rewards. For most people, that anticipation alone is enough to kick the brain's reward centers into gear.

A puzzle and the data to solve it

This plays out differently – and a little puzzlingly – in adolescents who use drugs. Kids' brains in general respond less when anticipating rewards, compared with adults' brains. But that effect is even more pronounced when those kids use drugs, which suggests one of two things: Either drugs suppress brain activity, or the suppressed brain activity somehow leads youths to take drugs.

If it's the latter, then Knutson's task could predict future drug use. But no one was sure, mainly because no one had measured brain activity in non-drug-using adolescents and compared it to eventual drug use.

No one, that is, except Büchel. As part of the IMAGEN consortium, he and colleagues in Europe had already collected data on around 1,000 14-year-olds as they went through Knutson's MID task. They had also followed up with each of them two years later to find out if they'd become problem drug users – for example, if they smoked or drank on a daily basis or ever used harder drugs like heroin. Then, Knutson and Büchel focused their attention on 144 adolescents who hadn't developed drug problems by age 14 but had scored in the top 25 percent on a test of novelty seeking.

Lower anticipation

Analyzing that data, Knutson and Büchel found they could correctly predict whether youngsters would go on to abuse drugs about two-thirds of the time based on how their brains responded to anticipating rewards. This is a substantial improvement over behavioral and personality measures, which correctly distinguished future drug abusers from other novelty-seeking 14-year-olds about 55 percent of the time, only a little better than chance.

"This is just a first step toward something more useful," Knutson said. "Ultimately the goal – and maybe this is pie in the sky – is to do clinical diagnosis on individual patients" in the hope that doctors could stop drug abuse before it starts, he said.

Knutson said the study first needs to be replicated, and he hopes to follow the kids to see how they do further down the line. Eventually, he said, he may be able not just to predict drug abuse, but also better understand it. "My hope is the signal isn't just predictive, but also informative with respect to interventions."

Read the original article Here


- Gist Reasoning and Traumatic Brain Injury

- By Jason von Stietz, M.A.

- February 24, 2017

-

BigStock The ability to understand the “gist” of things is an important and likely underappreciated skill. Gist reasoning, or the ability to look at complex, concrete information and make deeper-level abstract interpretations, is an essential part of each person’s daily activities. Researchers at Texas Woman’s University and the University of Texas at Dallas studied the relationship between gist reasoning and cognitive deficits in individuals with traumatic brain injury. The study was discussed in a recent article in Medical Xpress:

The study, published in Journal of Applied Biobehavioral Research, suggests the gist reasoning test may be sensitive enough to help doctors and clinicians identify previously undiagnosed cognitive changes that could explain the daily life difficulties experienced by TBI patients and subsequently guide appropriate therapies.

The gist reasoning measure, called the Test of Strategic Learning, accurately identified 84.7 percent of chronic TBI cases, a much higher rate than more traditional tests that accurately identified TBI between 42.3 percent and 67.5 percent of the time.

"Being able to 'get the gist' is essential for many day-to-day activities such as engaging in conversation, understanding meanings that are implied but not explicitly stated, creating shopping lists and resolving conflicts with others," said study lead author Dr. Asha Vas of Texas Woman's University who was a postdoctoral fellow at the Center for BrainHealth at the time of the study. "The gist test requires multiple cognitive functions to work together."

The study featured 70 participants ages 18 to 55, including 30 who had experienced a moderate to severe chronic traumatic brain injury at least one year ago. All the participants had similar socioeconomic status, educational backgrounds and IQ.

Researchers were blinded to the participants' TBI status while administering four different tests that measure abstract thinking: the ability to understand the big picture, not just recount the details of a story or other complex information. Researchers used the results to predict which participants were in the TBI group and which were healthy controls.

During the cognitive tests, the majority of the TBI group easily recognized abstract or concrete information when given prompts in a yes-no format. But the TBI group performed much worse than controls on tests, including gist reasoning, that required deeper level processing of information with fewer or no prompts.

The gist reasoning test consists of three texts that vary in length (from 291 to 575 words) and complexity. The test requires the participant to provide a synopsis of each of the three texts.

Vas provided an example of what "getting the gist" means using Shakespeare's play Romeo and Juliet.

"There are no right or wrong answers. The test relies on your ability to derive meaning from important story details and arrive at a high-level summary: Two young lovers from rival families scheme to build a life together and it ends tragically. You integrate existing knowledge, such as the concept of love and sacrifice, to create a meaning from your perspective. Perhaps, in this case, 'true love does not conquer all,'" she said.

Past studies have shown that higher scores on the gist reasoning test in individuals in chronic phases of TBI correlate to better ability to perform daily life functions.

"Perhaps, in the future, the gist reasoning test could be used as a tool to identify other cognitive impairments," said Dr. Jeffrey Spence, study co-author and director of biostatistics at the Center for BrainHealth. "It may also have the potential to be used as a marker of cognitive changes in aging."

Read the original article Here


- Long-Term Effects of TBI Studied

- By Jason von Stietz, M.A.

- February 19, 2017

-

Getty Images The long-term effects of traumatic brain injury (TBI) are only now beginning to be understood. Researchers from Cincinnati Children’s Hospital Medical Center examined the impact of TBI on pediatric patients an average of seven years after the injury. The findings indicated that children who have suffered a mild to moderate TBI are twice as likely to have attention problems as their healthy counterparts. The study was discussed in a recent article in Medical Xpress:

In a study to be presented Friday Feb. 10 at the annual meeting of the Association of Academic Physiatrists in Las Vegas, researchers from Cincinnati Children's will present research on long-term effects of TBI—an average of seven years after injury. Patients with mild to moderate brain injuries are two times more likely to have developed attention problems, and those with severe injuries are five times more likely to develop secondary ADHD. These researchers are also finding that the family environment influences the development of these attention problems.

- Parenting and the home environment exert a powerful influence on recovery. Children with severe TBI in optimal environments may show few effects of their injuries while children with milder injuries from disadvantaged or chaotic homes often demonstrate persistent problems.

- Early family response may be particularly important for long-term outcomes suggesting that working to promote effective parenting may be an important early intervention.

- Certain skills that can affect social functioning, such as speed of information processing, inhibition, and reasoning, show greater long-term effects.

- Many children do very well long-term after brain injury and most do not have across the board deficits.

More than 630,000 children and teenagers in the United States are treated in emergency rooms for TBI each year. But predictors of recovery following TBI, particularly the roles of genes and environment, are unclear. These environmental factors include family functioning, parenting practices, home environment, and socioeconomic status. Researchers at Cincinnati Children's are working to identify genes important to recovery after TBI and understand how these genes may interact with environmental factors to influence recovery.

- They will be collecting salivary DNA samples from more than 330 children participating in the Approaches and Decisions in Acute Pediatric TBI Trial.

- The primary outcome will be global functioning at 3, 6, and 12 months post injury, and secondary outcomes will include a comprehensive assessment of cognitive and behavioral functioning at 12 months post injury.

- This project will provide information to inform individualized prognosis and treatment plans.

Using neuroimaging and other technologies, scientists are also learning more about brain structure and connectivity related to persistent symptoms after TBI. In a not-yet-published Cincinnati Children's study, for example, researchers investigated the structural connectivity of brain networks following aerobic training. The recovery of structural connectivity they discovered suggests that aerobic training may lead to improvement in symptoms.

Over the past two decades, investigators at Cincinnati Children's have conducted a series of studies to develop and test interventions to improve cognitive and behavioral outcomes following pediatric brain injury. They developed an innovative web-based program that provides family-centered training in problem-solving, communication, and self-regulation.

- Across a series of randomized trials, online family problem-solving treatment has been shown to reduce behavior problems and executive dysfunction (management of cognitive processes) in older children with TBI and, over the longer term, to improve everyday functioning in 12- to 17-year-olds.

- Web-based parenting skills programs targeting younger children have resulted in improved parent-child interactions and reduced behavior problems. In a computerized pilot trial of attention and memory, children had improvements in sustained attention and parent-reported executive function behaviors. These intervention studies suggest several avenues for working to improve short- and long-term recovery following TBI.

Read the original article Here


Subscribe to our Feed via RSS
