Blog

- Expressive Writing "Cools" The Worried Brain

- By Jason von Stietz, M.A.

- September 22, 2017

It is often recommended that people write about their feelings. But does writing actually calm a worried brain? Researchers at Michigan State University found that expressive writing frees up cognitive resources, allowing anxious people to complete a cognitive task more efficiently. The study was discussed in a recent article in Medical Xpress:

The research, funded by the National Science Foundation and National Institutes of Health, provides the first neural evidence for the benefits of expressive writing, said lead author Hans Schroder, an MSU doctoral student in psychology and a clinical intern at Harvard Medical School's McLean Hospital.

"Worrying takes up cognitive resources; it's kind of like people who struggle with worry are constantly multitasking – they are doing one task and trying to monitor and suppress their worries at the same time," Schroder said. "Our findings show that if you get these worries out of your head through expressive writing, those cognitive resources are freed up to work toward the task you're completing and you become more efficient."

Schroder conducted the study at Michigan State with Jason Moser, associate professor of psychology and director of MSU's Clinical Psychophysiology Lab, and Tim Moran, a Spartan graduate who's now a research scientist at Emory University. The findings are published online in the journal Psychophysiology.

For the study, college students identified as chronically anxious through a validated screening measure completed a computer-based "flanker task" that measured their response accuracy and reaction times. Before the task, about half of the participants wrote about their deepest thoughts and feelings about the upcoming task for eight minutes; the other half, in the control condition, wrote about what they did the day before.

While the two groups performed at about the same level for speed and accuracy, the expressive-writing group performed the flanker task more efficiently, meaning they used fewer brain resources, measured with electroencephalography, or EEG, in the process.

Moser uses a car analogy to describe the effect. "Here, worried college students who wrote about their worries were able to offload these worries and run more like a brand new Prius," he said, "whereas the worried students who didn't offload their worries ran more like a '74 Impala – guzzling more brain gas to achieve the same outcomes on the task."

While much previous research has shown that expressive writing can help individuals process past traumas or stressful events, the current study suggests the same technique can help people – especially worriers – prepare for stressful tasks in the future.

"Expressive writing makes the mind work less hard on upcoming stressful tasks, which is what worriers often get 'burned out' over, their worried minds working harder and hotter," Moser said. "This technique takes the edge off their brains so they can perform the task with a 'cooler head.'"

View the article Here

- Dancing Improves Brain Health in Older Adults

- By Jason von Stietz, M.A.

- September 14, 2017

Most people have heard that exercise is good for the brain. But what are the benefits of dancing specifically? A recent study found that a dance program that taught older adults new dance moves led not only to increases in hippocampal volume but also to improvements in postural control and balance. The study was discussed in a recent article in Medical Xpress:

"Exercise has the beneficial effect of slowing down or even counteracting age-related decline in mental and physical capacity," says Dr Kathrin Rehfeld, lead author of the study, based at the German Center for Neurodegenerative Diseases in Magdeburg, Germany. "In this study, we show that two different types of physical exercise (dancing and endurance training) both increase the area of the brain that declines with age. In comparison, it was only dancing that led to noticeable behavioral changes in terms of improved balance."

Elderly volunteers, with an average age of 68, were recruited to the study and assigned to either an eighteen-month weekly course of learning dance routines or endurance and flexibility training. Both groups showed an increase in the volume of the hippocampus. This is important because this region can be prone to age-related decline and is affected by diseases like Alzheimer's. It also plays a key role in memory and learning, as well as in keeping one's balance.

While previous research has shown that physical exercise can combat age-related brain decline, it was not known whether one type of exercise is better than another. To assess this, the two groups were given different exercise routines. The traditional fitness training program consisted mainly of repetitive exercises, such as cycling or Nordic walking, whereas the dance group was challenged with something new each week.

Dr Rehfeld explains, "We tried to provide our seniors in the dance group with constantly changing dance routines of different genres (Jazz, Square, Latin-American and Line Dance). Steps, arm-patterns, formations, speed and rhythms were changed every second week to keep them in a constant learning process. The most challenging aspect for them was to recall the routines under the pressure of time and without any cues from the instructor."

These extra challenges are thought to account for the noticeable improvement in balance displayed by participants in the dancing group. Dr Rehfeld and her colleagues are building on this research to trial new fitness programs that have the potential to maximize anti-aging effects on the brain.

"Right now, we are evaluating a new system called 'Jymmin' (jamming and gymnastic). This is a sensor-based system which generates sounds (melodies, rhythm) based on physical activity. We know that dementia patients react strongly when listening to music. We want to combine the promising aspects of physical activity and active music making in a feasibility study with dementia patients."

Dr Rehfeld concludes with advice that could get us up out of our seats and dancing to our favorite beat.

"I believe that everybody would like to live an independent and healthy life, for as long as possible. Physical activity is one of the lifestyle factors that can contribute to this, counteracting several risk factors and slowing down age-related decline. I think dancing is a powerful tool to set new challenges for body and mind, especially in older age."

This study falls into a broader collection of research investigating the cognitive and neural effects of physical and cognitive activity across the lifespan.

Read the article Here

- Robotic Exoskeleton Improves Walking Ability in Children With Cerebral Palsy

- By Jason von Stietz, M.A.

- August 27, 2017

Cerebral palsy, a neurological and movement disorder, often limits mobility and independent functioning in children. Researchers at Northern Arizona University and the National Institutes of Health examined the use of a robotic exoskeleton to improve walking ability in children with cerebral palsy. The study was discussed in a recent article in Medical Xpress:

According to the Centers for Disease Control and Prevention, cerebral palsy (CP)—caused by neurological damage before, during or after birth—is the most common movement disorder in children, limiting mobility and independence throughout their lives. An estimated 500,000 children in the U.S. have CP.

Although nearly 60 percent of children with the disorder can walk independently, many have crouch gait, a pathological walking pattern characterized by excessive knee bending, which can cause an abnormally high level of stress on the knee. Crouch gait can lead to knee pain and progressive loss of function and is often treated through invasive orthopedic surgery.

Assistant professor of mechanical engineering Zach Lerner, who joined Northern Arizona University's Center for Bioengineering Innovation in 2017, recently published a study in the journal Science Translational Medicine investigating whether wearing a robotic exoskeleton—a leg brace powered by small motors—could alleviate crouch gait in children with cerebral palsy.

"We evaluated a novel exoskeleton for the treatment of crouch gait, one of the most debilitating pathologies in CP," Lerner said. "In our exploratory, multi-week trial, we fitted seven participants between the ages of five and 19 with robotic exoskeletons designed to increase their ability to extend their knees at specific phases in the walking cycle."

After being fitted with the assistive devices, the children participated in several practice sessions. At the end of the trial, six of the seven participants exhibited improvements in walking posture equivalent to outcomes reported from invasive orthopedic surgery. The researchers also demonstrated that improvements in crouch increased over the course of the exploratory trial, which was conducted at the National Institutes of Health Clinical Center in Bethesda, Maryland.

"Together, these results provide evidence supporting the use of wearable exoskeletons as a treatment strategy to improve walking in children with CP," Lerner said.

The exoskeleton was safe and well-tolerated, and all the children were able to walk independently with the device. Rather than guiding the lower limbs, the exoskeleton dynamically changed their posture by introducing bursts of knee extension assistance during discrete portions of the walking cycle, which resulted in maintained or increased knee extensor muscle activity during exoskeleton use.

"Our results suggest powered knee exoskeletons should be investigated as an alternative to or in conjunction with existing treatments for crouch gait, including orthopedic surgery, muscle injections and physical therapy," Lerner said.

Lerner leads NAU's Biomechatronics Lab, where his goal is to improve mobility and function in individuals with neuromuscular and musculoskeletal disabilities through innovations in mechanical and biomedical engineering. Building on the encouraging results of this study, his team is working toward conducting longer-term exoskeleton interventions to take place at home and in the community.

View the article Here

- Fear-Memories Can Be Erased, Study Finds

- By Jason von Stietz, M.A.

- August 25, 2017

At times, fear is healthy and necessary. For example, an individual hiking in the woods should feel fear and avoid approaching a bear or other wild animals. However, individuals often develop unhealthy or unhelpful fears, such as a phobia of dogs, even of dogs that are domesticated and friendly. Researchers at the University of California, Riverside investigated a method for selectively erasing fear memories by weakening the connections between the neurons involved in forming those memories. The study was discussed in a recent article in Medical Xpress:

To survive in a dynamic environment, animals develop fear responses to dangerous situations. But not all fear memories, such as those in PTSD, are beneficial to our survival. For example, while an extremely fearful response to the sight of a helicopter is not a useful one for a war veteran, a quick reaction to the sound of a gunshot is still desirable. For survivors of car accidents, it would not be beneficial for them to relive the trauma each time they sit in a car.

In their lab experiments, Jun-Hyeong Cho, M.D., Ph.D., an assistant professor of molecular, cell, and systems biology, and Woong Bin Kim, his postdoctoral researcher, found that fear memory can be manipulated in such a way that some beneficial memories are retained while others, detrimental to our daily life, are suppressed.

The research, done using a mouse model and published today in Neuron, offers insights into how PTSD and specific phobias may be better treated.

"In the brain, neurons communicate with each other through synaptic connections, in which signals from one neuron are transmitted to another neuron by means of neurotransmitters," said Cho, who led the research. "We demonstrated that the formation of fear memory associated with a specific auditory cue involves selective strengthening in synaptic connections which convey the auditory signals to the amygdala, a brain area essential for fear learning and memory. We also demonstrated that selective weakening of the connections erased fear memory for the auditory cue."

In the lab, Cho and Kim exposed mice to two sounds: a high-pitch tone and a low-pitch tone. Neither tone produced a fear response in the mice. Next, they paired only the high-pitched tone with a mild footshock administered to the mice. Following this, Cho and Kim again exposed the mice to the two tones. To the high-pitch tone (with no accompanying footshock), the mice responded by ceasing all movement, called freezing behavior. The mice showed no such response to the low-pitch sound (with no accompanying footshock). The researchers found that such behavioral training strengthened synaptic connections that relay the high-pitch tone signals to the amygdala.

The researchers then used a method called optogenetics to weaken the synaptic connection with light, which erased the fear memory for the high-pitch tone.

"In the brain, neurons receiving the high- and low-pitch tone signals are intermingled," said Cho, a member of the Center for Glial-Neuronal Interactions in the UC Riverside School of Medicine. "We were able, however, to experimentally stimulate just those neurons that responded to the high-pitch sound. Using low-frequency stimulations with light, we were able to erase the fear memory by artificially weakening the connections conveying the signals of the sensory cue—a high-pitch tone in our experiments - that are associated with the aversive event, namely, the footshock."

Cho explained that for adaptive fear responses to be developed, the brain must discriminate between different sensory cues and associate only relevant stimuli with aversive events.

"This study expands our understanding of how adaptive fear memory for a relevant stimulus is encoded in the brain," he said. "It is also applicable to developing a novel intervention to selectively suppress pathological fear while preserving adaptive fear in PTSD."

The researchers note that their method can be adapted for other research, such as "reward learning," in which a stimulus is paired with a reward. They plan next to study the mechanisms involved in reward learning, which has implications for treating addictive behaviors.

Read the article Here

- Ritalin's Impact on the Developing Brain

- By Jason von Stietz, M.A.

- July 31, 2017

-

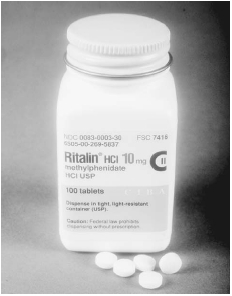

Does medication taken to treat ADHD in children have a long-term impact on the brain? Researchers in the Netherlands examined the impact of methylphenidate, or Ritalin, on the developing brain. Using magnetic resonance spectroscopy scans, the researchers found that methylphenidate use before the age of 23 was related to altered levels of GABA, an inhibitory neurotransmitter, in the medial prefrontal cortex. The study was discussed in a recent article in Medical Xpress:

“The prevalence of ADHD has increased rapidly over the last few years,” said Michelle M. Solleveld of the University of Amsterdam, the study’s corresponding author. “With this, prescription rates of methylphenidate, also known as Ritalin, are increasing as well. The safety and efficacy of methylphenidate treatment in adults has been studied thoroughly. However, methylphenidate treatment for ADHD is actually more common in children, and no studies so far have investigated the possible long-term effects of methylphenidate on the developing brain.”

“Animal studies show that when animals were treated with methylphenidate during their childhood, persisting effects in the brain remained present well into adulthood,” Solleveld told PsyPost. “This alarmed me, as this hasn’t been studied in humans, but psychiatrists are increasingly prescribing methylphenidate to children to acutely decrease their symptoms of ADHD. I, together with the rest of the research group, therefore wanted to investigate this in humans in order to gain more knowledge about these possible persisting effects.”

The researchers used magnetic resonance spectroscopy scans to examine GABA levels in the medial prefrontal cortex of 44 male ADHD patients. They found evidence that methylphenidate use in childhood produced long-lasting alterations in GABA neurotransmission in this region of the brain.

“This study focuses on one specific neurotransmitter system: the GABA system,” Solleveld explained. “We report that there are lasting changes in this neurotransmitter system when ADHD patients are treated before the age of 23 years old, i.e. during brain development.”

“This was not the case when treatment was started after the age of 23, when the brain was matured. These results indicate that treatment with methylphenidate in childhood does alter this specific neurotransmitter system in the brain in a long-lasting manner.”

Patients who were treated for the first time with stimulants as children showed significantly lower GABA levels in the medial prefrontal cortex, compared to those who started treatment as adults. A dose of methylphenidate also produced a significant increase in GABA levels in patients treated in childhood, but not in patients treated in adulthood.

But more research is needed to strengthen these preliminary findings and understand their implications.

“We now know that there are lasting alterations in the GABA system in the brain after treatment during brain development,” Solleveld told PsyPost. “We do however not know yet whether and how this is expressed in for example a person’s behavior, performance on psychological tests or other personal characteristics.

“Additionally, we only focused on a specific neurotransmitter system, in only 1 voxel (‘cube’ of 2.5×3.5×2.5cm) in the brain, namely in the medial prefrontal cortex. Future studies should address other systems in the brain or focus on behavioral aspects of possible lasting effects of methylphenidate treatment during brain development.”

“This study is part of a larger project, called the ‘effects of Psychotropic drugs on Developing brain’ (ePOD),” Solleveld added. “This study consists of a randomized clinical trial part, in which 50 children and 49 adults were treated for 4 months with either methylphenidate or placebo, and a cross-sectional study. We are currently analyzing all the data of both the cross-sectional study and the clinical trial, and expect to report more on this topic in the near future. Please find another publication of our group here: http://jamanetwork.com/journals/jamapsychiatry/fullarticle/2538518.”

The study, “Age-dependent, lasting effects of methylphenidate on the GABAergic system of ADHD patients”, was also co-authored by Anouk Schrantee, Nicolaas A.J. Puts, Liesbeth Reneman, and Paul J. Lucassen.

Read the original article Here

- Poverty and Malnutrition's Effect on the Brain

- By Jason von Stietz, M.A.

- July 25, 2017

Researchers in Dhaka, Bangladesh, are studying the link between stunted growth associated with impoverished conditions and brain development. About 40% of children in the slums of Dhaka have stunted growth by the age of two, and magnetic resonance imaging scans show these children to have significantly smaller volumes of grey matter than their healthy counterparts. The study was discussed in a recent article in Nature:

In the late 1960s, a team of researchers began doling out a nutritional supplement to families with young children in rural Guatemala. They were testing the assumption that providing enough protein in the first few years of life would reduce the incidence of stunted growth.

It did. Children who got supplements grew 1 to 2 centimetres taller than those in a control group. But the benefits didn't stop there. The children who received added nutrition went on to score higher on reading and knowledge tests as adolescents, and when researchers returned in the early 2000s, women who had received the supplements in the first three years of life completed more years of schooling and men had higher incomes.

“Had there not been these follow-ups, this study probably would have been largely forgotten,” says Reynaldo Martorell, a specialist in maternal and child nutrition at Emory University in Atlanta, Georgia, who led the follow-up studies. Instead, he says, the findings made financial institutions such as the World Bank think of early nutritional interventions as long-term investments in human health.

Since the Guatemalan research, studies around the world — in Brazil, Peru, Jamaica, the Philippines, Kenya and Zimbabwe — have all associated poor or stunted growth in young children with lower cognitive test scores and worse school achievement2.

A picture slowly emerged that being too short early in life is a sign of adverse conditions — such as poor diet and regular bouts of diarrhoeal disease — and a predictor for intellectual deficits and mortality. But not all stunted growth, which affects an estimated 160 million children worldwide, is connected with these bad outcomes. Now, researchers are trying to untangle the links between growth and neurological development. Is bad nutrition alone the culprit? What about emotional neglect, infectious disease or other challenges?

Shahria Hafiz Kakon is at the front line trying to answer these questions in the slums of Dhaka, Bangladesh, where about 40% of children have stunted growth by the age of two. As a medical officer at the International Centre for Diarrhoeal Disease Research, Bangladesh (icddr,b) in Dhaka, she is leading the first-ever brain-imaging study of children with stunted growth. “It is a very new idea in Bangladesh to do brain-imaging studies,” says Kakon.

The research is innovative in other respects, too. Funded by the Bill & Melinda Gates Foundation in Seattle, Washington, it is one of the first studies to look at how the brains of babies and toddlers in the developing world respond to adversity. And it promises to provide important baseline information about early childhood development and cognitive performance.

Kakon and her colleagues have run magnetic resonance imaging (MRI) tests on two- and three-month-old children, and identified brain regions that are smaller in children with stunted growth than in others. They are also using other tests, such as electroencephalography (EEG).

“Brain imaging could potentially be really helpful” as a way to see what is going on in the brains of these young children, says Benjamin Crookston, a health scientist at Brigham Young University in Provo, Utah, who led studies in Peru and other low-income countries that reported a link between poor growth and cognitive setbacks.

The long shadow of stunting

In 2006, the World Health Organization (WHO) reported an extensive study to measure the heights and weights of children between birth and the age of five in Brazil, Ghana, India, Norway, Oman and the United States3. The results showed that healthy, well-fed children the world over follow a very similar growth trajectory, and the study established benchmarks for atypical growth. Stunted growth, the WHO decided, is defined as a height more than two standard deviations below the median height for a particular age. Such a difference can seem subtle. At 6 months old, a girl would be considered to have stunted growth if she was 61 centimetres long, even though that is less than 5 centimetres short of the median.
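The WHO cutoff amounts to a simple standard-score (z-score) rule: subtract the reference median from a child's length and divide by the reference standard deviation. The sketch below is only an illustration, assuming a normal distribution around the median. The median (65.7 cm) and standard deviation (2.3 cm) in the example are hypothetical placeholder values chosen for illustration, not figures from the article; a real assessment would use the official WHO growth tables.

```python
def height_for_age_z(length_cm: float, median_cm: float, sd_cm: float) -> float:
    """Return how many standard deviations a child's length lies from the reference median."""
    if sd_cm <= 0:
        raise ValueError("standard deviation must be positive")
    return (length_cm - median_cm) / sd_cm


def is_stunted(length_cm: float, median_cm: float, sd_cm: float, cutoff: float = -2.0) -> bool:
    """Stunted if the z-score falls below the cutoff (-2, i.e. two SDs under the median)."""
    return height_for_age_z(length_cm, median_cm, sd_cm) < cutoff


# Hypothetical reference values for a 6-month-old girl (illustrative placeholders only).
MEDIAN_CM = 65.7
SD_CM = 2.3

for length in (61.0, 63.0):
    z = height_for_age_z(length, MEDIAN_CM, SD_CM)
    print(f"{length} cm: z = {z:.2f}, stunted = {is_stunted(length, MEDIAN_CM, SD_CM)}")
```

With these placeholder values, a 61 cm girl is only 4.7 cm below the median, yet her z-score (about -2.04) already falls just past the cutoff, while a 63 cm girl (z about -1.17) does not. This mirrors the point that the threshold can seem subtle in absolute terms.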

The benchmarks helped to raise awareness about stunting. In many countries, more than 30% of children under five meet the definition; in Bangladesh, India, Guatemala and Nigeria, over 40% do. In 2012, growing consensus about the effects of stunting motivated the WHO to pledge to reduce the number of children under five with stunted growth by 40% by 2025.

Even as officials started to take action, researchers realized there were serious gaps in protocols to identify the problems related to stunting. Many studies of brain development relied on tests of memory, speech and other cognitive functions that are ill-suited to very young children. “Babies do not have much of a behavioural repertoire,” says Michael Georgieff, a paediatrician and child psychologist at the University of Minnesota in Minneapolis. And if parents and doctors have to wait until children are in school to notice any differences, it will probably be too late to intervene.

That's where Kakon's work fits in. At 1.63 metres, she is not tall by Western standards, but at the small apartment-building-turned-clinic in Dhaka where she works, she towers over most of her female colleagues. On a recent morning she was with a mother who had phoned her in the middle of the night: the woman's son had a fever. Before examining the boy, Kakon asked his mother how the family was and how he was doing at school, as she usually does. Many parents call Kakon apa — a Bengali word for big sister.

About five years ago, the Gates Foundation became interested in tracking brain development in young children living with adversity, especially stunted growth and poor nutrition. The foundation had been studying children's responses to vaccines at Kakon's clinic. The high rate of stunting, along with the team's strong bonds with participants, clinched the deal.

To get the study off the ground, the foundation connected the Dhaka team with Charles Nelson, a paediatric neuroscientist at Boston Children's Hospital and Harvard Medical School in Massachusetts. He had expertise in brain imaging — and in childhood adversity. In 2000, he began a study tracking the brain development of children who had grown up in harsh Romanian orphanages. Although fed and sheltered, the children had almost no stimulation, social contact or emotional support. Many have experienced long-term cognitive problems.

Nelson's work revealed that the orphans' brains bear marks of neglect. MRIs showed that by the age of eight, they had smaller regions of grey and white matter associated with attention and language than did children raised by their biological families4. Some children who had moved from the orphanages into foster homes as toddlers were spared some of the deficits.

The children in the Dhaka study have a completely different upbringing. They are surrounded by sights, sounds and extended families who often all live together in tight quarters. It is the “opposite of kids lying in a crib, staring at a white ceiling all day”, says Nelson.

But the Bangladeshi children do deal with inadequate nutrition and sanitation. And researchers hadn't explored the impacts of such conditions on cerebral development. There are brain-imaging studies of children growing up in poverty — which, like stunting, could be a proxy for inadequate nutrition6. But these have mostly focused on high-income areas, such as the United States, Europe and Australia. No matter how poor the children there are, most have some nutritious foods, clean water and plumbing, says Nelson. Those in the Dhaka slums live and play around open canals of sewage. “There are many more kids like the kids in Dhaka around the world,” he says. “And we knew nothing about them from a brain level.”

The marks of adversity

By early 2015, Nelson's team and the Bangladeshi researchers had transformed the humble Dhaka clinic into a state-of-the-art lab. For their EEG equipment, they had to find a room with no wires in the walls and without air-conditioning units, both of which could interfere with the device's ability to detect activity in the brain.

The researchers also set up a room for functional near-infrared spectroscopy (fNIRS), in which children wear a headband of sensors that measure blood flow in the brain. The technique gives information about brain activity similar to that from functional MRI, but does not require a large machine, and the children do not have to remain motionless. fNIRS has been used in infants since the late 1990s and is now gaining traction in low-income settings.

The researchers are also performing MRIs at a hospital near the clinic. So far, they have scanned 12 babies aged 2 to 3 months with stunted growth. Similar to the Romanian orphans and the children growing up in poverty in developed countries, these children had smaller volumes of grey matter than a group of 20 non-stunted babies. It is “remarkably bad”, Nelson says, to see these differences at such a young age. It's hard to tell which regions are affected in such young children, but having less grey matter was associated with worse scores on language and visual-memory tests at six months old.

Some 130 children in the Dhaka study had fNIRS tests at 36 months old, and the researchers saw distinct patterns of brain activity in those with stunting and other adversity. The shorter the children were, the more brain activity they had in response to images and sounds of non-social stimuli, such as trucks. Taller children responded more to social stimuli, such as women's faces. This could suggest delays in the process by which brain regions become specialized for certain tasks, Nelson says.

EEG detected stronger electrical activity among children with stunted growth, along with a range of brainwaves that reflect problem solving and communication between brain regions. That was a surprise to the researchers, because studies in orphans and poor children have generally found dampened activity7. The discrepancy could be related to the different types of adversity that children in Dhaka face, including food insecurity, infections and mothers with high rates of depression.

Nelson's team is trying to parse out which forms of adversity seem to be most responsible for the differences in brain activity among the Dhaka children. The enhanced electrical signals in EEG tests are strongly linked to increases in inflammatory markers in the blood, which probably reflect greater exposure to gut pathogens.

If this holds up as more children are tested, it could point to the importance of improving sanitation and reducing gastrointestinal infections. Or maternal depression could turn out to be strongly linked to brain development, in which case helping mothers could be just as crucial as making sure their babies have good nutrition. “We don't know the answers yet,” says Nelson.

The participants tested at 36 months are now around 5 years old, and the team is getting ready to take some follow-up measurements. These will give an idea of whether or not the children have continued on the same brain-development trajectory, Nelson says. The researchers will also give the five-year-olds IQ and school-readiness tests to gauge whether the earlier measurements were predictive of school performance.

A better baseline

One of the challenges of such studies is that researchers are still trying to work out what normal brain development looks like. A few years before the Dhaka study began, a team of British and Gambian researchers geared up to do EEG and fNIRS testing on children in rural Gambia during the first two years of life. They were also funded by the Gates Foundation.

Similar to the Dhaka study, the researchers are looking at how brain development is related to a range of measures, including nutrition and parent–child interaction. But along the way, they are trying to define a standard trajectory of brain function for children.

There is a big push at the Gates Foundation and the US National Institutes of Health to nail down that picture of normal brain development, says Daniel Marks, a paediatric neuroscientist at Oregon Health & Science University in Portland, and a consultant for the foundation. “It is just a reflection of the urgency of the problem,” he says.

One of the hopes for the Dhaka study, and the motivation for funding it, is that it will reveal distinct patterns in babies' brains that are predictive of poor outcomes later in life and could be used to see whether interventions are working, says Jeff Murray, a deputy director of discovery and translational sciences at the Gates Foundation.

Any such intervention will probably have to include nutrition, says Martorell. He and his colleagues are doing yet another follow-up study of the Guatemalan villagers to see whether those who got protein supplements before the age of 7 have lower rates of heart disease and diabetes 40 years later. But nutrition alone is unlikely to be enough — either to prevent stunting or to promote normal cognitive development, Martorell says. So far, the most successful nutritional interventions have helped to overcome about one-third of the typical height deficit. And such programmes can be very expensive; in the Guatemalan study, for example, the researchers ran special centres to provide supplements.

Nevertheless, researchers are striving to improve interventions. A group involved in the vaccine study in Bangladesh is planning to test supplements in pregnant women in the hope of boosting babies' birth weight and keeping their growth on track in the crucial first two years of life. Tahmeed Ahmed, senior director of nutrition and clinical services at the diarrhoeal-disease research centre, is planning a trial of foods such as bananas and chickpeas, to try to promote the growth of good gut bacteria in Bangladeshi children aged 12 to 18 months. A healthy bacterial community could make the gut less vulnerable to infections that interfere with nutrient absorption and that ramp up inflammation in the body.

Ultimately, it's not about whether children have stunted growth or even what their brains look like. It's about what their lives are like as they grow older. Studies such as the one in Dhaka strive to help determine whether interventions are working sooner rather than later. “If you have to wait until kids are 25 years old to see whether they are employed,” Murray says, “it could take you 25 years to do every study.”

Read the original article Here

- fMRI Predicts And Monitors Change in PTSD Symptoms Following Psychotherapy

- By Jason von Stietz, M.A.

- July 19, 2017

Researchers from Stanford University School of Medicine investigated the use of brain imaging to predict the effectiveness of psychotherapy and to measure changes in the brain following treatment. The researchers used functional magnetic resonance imaging (fMRI) with 66 participants diagnosed with post-traumatic stress disorder (PTSD), about half of whom then underwent prolonged exposure therapy. The findings were discussed in a recent article in MedicalXpress:

PTSD is a serious mental disorder that can develop after a dangerous or traumatic event. Patients experience recurring memories of the event; avoid situations, people or thoughts that remind them of the event; and experience altered mood and thinking patterns. Nearly 7 percent of people in the United States will suffer from PTSD at some point in their lifetime, according to the National Institute of Mental Health.

Prolonged exposure therapy for PTSD consists of a series of sessions and homework assignments that lead patients to gradually approach trauma-related memories and situations. Patients begin by imagining scenarios that trigger their PTSD symptoms—such as a crowded park. Then, they work up to deliberately putting themselves in those scenarios. Revisiting traumatic experiences in this manner can, over time, allow the brain to slowly reduce its response to emotional triggers. However, not all PTSD patients derive benefit from the treatment, and about a third drop out of the arduous process, according to Etkin, who is also an investigator at the Veterans Affairs Palo Alto Health Care System. About two-thirds of patients receiving prolonged exposure therapy see a 50 percent reduction in symptoms, and 40 percent of them achieve remission, he said.

To learn how prolonged exposure therapy works in the brain, the studies used functional magnetic resonance imaging to measure the brain activity of 66 patients diagnosed with PTSD as they completed five tasks tapping a variety of emotional and cognitive functions. During these tasks, patients would view, for example, images of faces or scenes—like happy, neutral or scared faces or a scene depicting an event meant to induce negative emotions, such as an argument or physical violence—and either respond to questions about the images or try to control their response to the image's content.

After the initial brain imaging, about half the participants underwent nine to 12 sessions of prolonged exposure therapy; the remainder did not. At the end of the trial, all participants went through the same emotional response and regulation tests while researchers measured brain activity.

A step closer to personalized treatment

One of the studies focused on whether brain activity levels before treatment could help scientists predict which participants would respond well to prolonged exposure therapy. The researchers measured how active certain brain regions were during the five tasks and looked for associations with reduced symptoms post-treatment.

Prior to receiving prolonged exposure therapy, patients with both lower activity in the amygdala and higher activity in various regions of the frontal lobe while viewing faces with fearful expressions showed a larger reduction in PTSD symptoms following therapy. Fonzo refers to the amygdala, seated deep within a primitive region of the brain, as the brain's alarm system, as it plays an important role in fear and other emotional responses. The frontal lobe is the region of the brain behind the forehead; it plays a role in complex functions such as behavior, personality and decision-making.

The researchers also found that patients with greater activation in a deep region of the frontal lobe when ignoring the distracting effects of conflicting emotional information—such as a picture of a scared face with the word "happy" written across it—responded better to exposure therapy.

"The better able the brain is at deploying attention- and emotion-controlling processes, the better you respond to treatment," said Fonzo.

Using this information about how the brain responds in emotional regulation and processing tasks, the team was able to predict how effective prolonged exposure therapy treatment would be for patients with up to 95.5 percent accuracy. This kind of screening approach, perhaps using the less expensive and more widely available electroencephalogram rather than fMRI, could help doctors determine the best course of PTSD therapy in the future, the researchers said.

"Not only could it provide a ray of hope for patients who would benefit from prolonged exposure to make it through the tough course," said Goodkind, "it means patients who wouldn't derive a benefit wouldn't have to start the treatment."

Therapy changes the brain

In the second study, the researchers found that prolonged exposure therapy led to lasting changes in participants' brains that were associated with improvement in PTSD symptoms. About four weeks after therapy ended, fMRI showed elevated activity in the front-most region of the frontal lobe, an area called the frontopolar cortex. This region is the most recently evolved part of the human brain. It balances internal and external attention and helps coordinate multiple processes in the brain simultaneously, said Fonzo, as would occur when multitasking and remembering future to-do list items.

The role the frontopolar cortex plays in prolonged exposure therapy was surprising, Fonzo said, because much of the scientific attention on emotional processes in PTSD has centered on the amygdala.

Specifically, the changes the researchers observed in frontopolar cortex activity occurred when participants were instructed to regulate their emotional response to an image of a negative or stressful scenario, such as one depicting an argument. The researchers also noted changes in frontopolar cortex activity in these participants during a resting, nonfocused state. The changes in frontopolar cortex activity indicate a shift in frontopolar function following therapy, according to the authors.

The post-therapy brain changes also included increased connectivity between the frontopolar cortex and deeper brain regions closer to emotional processing areas. The authors wrote that psychotherapy may train the frontopolar cortex "to better evoke or amplify attention toward an internal regulatory process that mediates successful emotion regulation."

The degree to which activity in the frontopolar cortex increased following therapy was associated with the degree of improvement in PTSD symptoms and emotional well-being.

Exploring use of transcranial magnetic stimulation

To confirm whether the frontopolar cortex controls important brain regions for emotional processing, the team used a noninvasive method of stimulating brain activity called transcranial magnetic stimulation, or TMS, to activate the frontopolar cortex in healthy people. They simultaneously recorded brain activity with fMRI and confirmed that the frontopolar cortex modulated downstream activity in lower cortical regions closer to emotion-processing parts of the brain.

They also explored whether TMS might help PTSD patients respond to prolonged exposure treatment. Building off their findings that greater frontal lobe and less amygdala activation predicts better treatment outcome, the researchers activated a region of the frontal cortex with TMS probes while imaging the brain. They found that doing so inhibited activity in the amygdala, and the degree to which that happened also predicted the degree to which a patient's symptoms improved. In the future, stimulating this region may help increase patients' responsiveness to psychotherapy, they said. Indeed, some small-scale studies in which therapeutic TMS was used daily on the same region of the frontal cortex, without the addition of psychotherapy, have already shown promising results.

"These findings put a place marker in our understanding of psychotherapy writ large. We can really put psychiatric disorders on the map in terms of hard science and help fight the stigma that surrounds these illnesses and their treatment," said Etkin. "Within the field of PTSD, it gives a concrete sense of hope for people undergoing treatment and starts laying the groundwork for new treatments based on understanding brain circuitry."

Read the original article Here

- Journalist Undergoes QEEG And Discusses Experience

- By Jason von Stietz, M.A.

- June 30, 2017

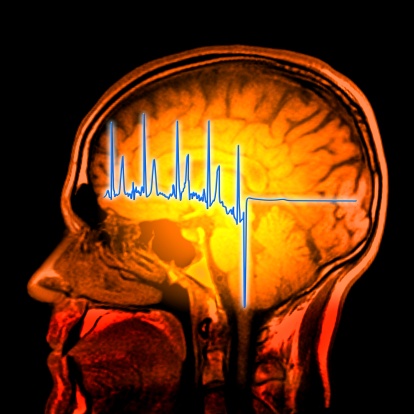

Getty Images What is the layperson’s perception of quantitative EEG (qEEG) or brain mapping? Does it seem exciting or scary? Does the average person view the qEEG results as a means of self-discovery or as invalidating to their sense of self? Recently, a journalist underwent a brain mapping and discussed her results with QEEG experts Cynthia Kerson, Ph.D. and Jay Gunkelman, QEEG-D. Her experiences were discussed in a recent article in The Atlantic:

The woman who would be mapping my brain, Cynthia Kerson, had tanned, toned arms and long silvery hair worn loose. Her home office featured an elegant calligraphy sign reading “BREATHE,” and also a mug that said “I HAVE THE PATIENCE OF A SAINT—SAINT CUNTY MCFUCKOFF.”

Kerson is a neurotherapist, which means she practices a form of alternative therapy that involves stimulating brain waves until they reach a specific frequency. Neurotherapy has a questionable reputation, which its practitioners sometimes try to counter by putting as many acronyms next to their names as possible. Kerson comes with a Ph.D., QEEGD, BCN, and BCB. She’s also past president of the Biofeedback Society of California and teaches at Saybrook University. Even so, somehow it was the tension between those two pieces of office ephemera that made me instinctively want to trust her.

Kerson used to have a clinic in Marin County, where she primarily saw children with ADHD, using neurotherapy techniques to help them learn to focus. But she also worked with elite athletes who wanted to improve their performance, as well as people suffering from chronic pain and anxiety and schizophrenia and a host of other disorders. These days, she’s so busy teaching and consulting that she no longer runs her individual practice, but she agreed to bring out her brain-mapping equipment for me: snug-fitting cloth caps in various sizes; a tube of Electrogel, a conductive goo; a black box made by BrainMaster Technologies that would receive my brain’s signals and spit them out into her computer.

I’m the kind of person who procrastinates with personality tests; I’m susceptible to the way they target that place where self-loathing and narcissism overlap. I suppose it stems from the feeling that there is something uniquely and specially wrong with me, and wanting to know all about it.

So I’ll admit that I was thinking of this brain map in overly fanciful terms: It would be like a personality test but scientific. I kept thinking about this line I’d read in a book by Paul Swingle, a Canadian psychoneurophysiologist who uses brain maps to identify neurological abnormalities: “The brain tells us everything.”

Kerson placed the cap on my head and clipped two sensors onto my earlobes, areas of no electrical activity, to act as baselines. As she began Electrogelling the 19 spots on my head that aligned with the cap’s electrodes, I was nervous in two different directions: one, that my brain would be revealed as suboptimal, underfunctioning, deficient. The other, that it would be fine, average, unremarkable.

* * *

EEG tests, which measure electrical signals in the brain, have been used for decades by physicians to look for anomalies in brain-wave patterns that might indicate stroke or traumatic brain injury. The kind of brain map I was getting used a neuroimaging technique formally known as quantitative electroencephalogram, or qEEG. It follows the same general principle as EEG tests, but adds a quantitative element: Kerson would compare my brain waves against a database of conventionally functioning, or “neurotypical,” brains. Theoretically, this allows clinicians to pick up on more subtle deviations—brain-wave forms that are associated with cognitive inflexibility, say, or impulsivity.
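To make the idea of "comparing against a database of neurotypical brains" more concrete, here is a minimal Python sketch of a z-score comparison. It assumes each measured value is compared with a normative mean and standard deviation, and that values two or more standard deviations from the mean are flagged. The band names, the numbers, and the 2.0 cutoff are hypothetical placeholders, not values from Kerson's software or any real normative database.

```python
# Hypothetical sketch of a qEEG-style normative comparison.
# All band names, normative means/SDs, and the 2.0 cutoff are made-up placeholders.


def z_scores(measured: dict[str, float], norms: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Z-score per band: (measured value - normative mean) / normative SD."""
    return {band: (value - norms[band][0]) / norms[band][1] for band, value in measured.items()}


def flag_deviations(z: dict[str, float], cutoff: float = 2.0) -> list[str]:
    """Return the bands whose absolute z-score meets or exceeds the cutoff, sorted by name."""
    return sorted(band for band, score in z.items() if abs(score) >= cutoff)


if __name__ == "__main__":
    # Placeholder normative database: band -> (mean, standard deviation), arbitrary units.
    norms = {"theta": (6.0, 1.5), "alpha": (10.0, 2.0), "beta": (5.0, 1.0)}
    # Placeholder measurements for one person.
    measured = {"theta": 6.3, "alpha": 9.0, "beta": 7.5}

    z = z_scores(measured, norms)
    for band, score in z.items():
        print(f"{band}: z = {score:+.2f}")
    print("flagged:", flag_deviations(z))
```

With these placeholder numbers only "beta" lands outside the cutoff. A real comparison would cover many more measures across all 19 electrode sites; the sketch only illustrates the basic "how far from typical" arithmetic.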

In neurotherapy, qEEGs are generally a precursor to treatments like neurofeedback or deep brain stimulation, which are used to alter brain waves, or to train people to change their own. Neurotherapy claims it can tackle persistent depression or PTSD or anger issues without resorting to talk therapy or pharmaceutical interventions, by addressing the very neural oscillations that underlie these problems. If you see your brain function in real time, the idea goes, you can trace mental-health issues to their physiological roots—and make direct interventions.

But critics argue that neurotherapy’s treatments—which might take dozens of sessions, each costing hundreds of dollars—have very little research backing them up. And although the mainstream medical community is starting to pay closer attention to the field, particularly in Europe, in the U.S. neurotherapy is still largely unregulated, with practitioners of varying levels of expertise offering treatments in outpatient clinics. At the most basic level, not everyone who’s invested in the technology that allows them to do qEEG testing is able to correctly interpret the resulting brain map. Certification to administer a qEEG test—a process overseen by the International qEEG Certification Board—requires only 24 hours of training, five supervised evaluations, and an exam, with no prior medical experience.

As Jay Gunkelman, an EEG expert and past president of the International Society for Neurofeedback and Research, puts it: “It’s a Wild West, buyer-beware situation out there.”

All this is to say that while skilled interpreters can pick up all sorts of information from an EEG, these tests are also “ripe for overstatement,” according to Michelle Harris-Love, a neuroscientist at Georgetown’s Center for Brain Plasticity and Recovery. That’s worrisome since, in recent years, EEG technology has gotten cheaper and more widely available. A qEEG brain map can cost as little as a few hundred dollars, which means more people are taking a peek at their brain waves, not just for diagnostic purposes, but also with optimization in mind.

“People will come in for optimal training,” Kerson told me as she adjusted the sensors in my cap. “But what often happens is we’ll find something a little pathological. Which I guess depends on your definition of pathological.”

NeuroAgility, an “attention and performance psychology” clinic in Boulder, Colorado, for instance, brainmaps CEOs and then uses neurotherapy to help them “come from a place of action, rather than reaction.” Other clinics promise to use the technology to help athletes and actors get in the zone, as Kerson did in her private practice. “There are business executives who want to reduce their obsessive-compulsive traits, or athletes who want to tune up their engines,” Gunkelman told me. “At Daytona, they’re all fabulous cars, but every single one of them gets a tune up three times a day. No matter who you are, if you look at brain activity, there are things we can do to get you to function better.”

* * *

For the first five minutes my brain was being mapped, I sat with my eyes closed. My mind felt unquiet; I was thinking about what it felt like to have a brain, trying to describe to myself the feeling of having thoughts. “Your eyes are moving around a lot underneath your lids,” Kerson said. She suggested I put my fingertips on my eyelids to keep my eyes from shifting. I sat like the see-no-evil monkey for the rest of the test, trying to remain thoughtless and keep my jumpy eyes still.

When the first half of the test was done, I spied my brain waves on Kerson’s computer screen: 19 thin, wobbly gray lines stretching across a white background. My brain activity looked like an Agnes Martin painting. Kerson had me turn the chair around for the second, eyes-open half, in case watching the real-time brain waves made me self-conscious. Her software program chimed out a warning every time I blinked, which turned out to be a lot. “I’m going to turn off the sound so you don’t get frustrated,” Kerson said.

When we were done, she scrolled through the 10 minutes of brain waves. Two of the lines looked alarming—every few seconds they jolted all over the place, like some sort of seismic indication of an internal earthquake. Kerson told me not to worry; the EEG also picks up on muscle movements, and those were my blinks.

“So there’s one thing I see right off the bat,” she said. “We’d expect to see more alpha when you close your eyes. But it actually looks pretty similar whether your eyes are open or closed. That tells me that you might not sleep well, you might have some anxiety, you might be overly sensitive—your brain talks to itself a lot. You can’t quiet yourself.” This was all accurate, if not news to me.

Kerson continued to scan through the test, selecting sections that weren’t compromised by my blinks, trying to gather enough clean data to match against the database. She ran the four good minutes through the program, which spat out an analysis of my brain waves that looked something like a heat map, with areas of relative over- and under-functioning indicated by patches of color. By most measures, my brain appeared a moderate, statistically insignificant green. “You’re neurotypical,” she said, sounding minorly disappointed.

Kerson nonetheless recommended vitamins to beef up my neural connections, since my amplitudes were a little lackluster. “Meditating would be good for you, but you’re going to need something else for meditation to work,” she told me, noting that I should consider some alpha training, which would involve putting on headphones to listen to sounds that would get my brain waves into the right frequency. I should also probably change out my contacts if I was blinking that much.

Kerson began folding up the electrode-studded cap, and I realized with a slight feeling of deflation that that was it. “It was nice to meet you,” she called out as I pulled out of her driveway. “And it was nice to meet your brain!”

* * *

A qEEG may not be anything like a personality test, but it still left me with the same unsatisfied feeling of being parsed and analyzed but still fundamentally unknown. My mind had been mapped, I had seen the shape of my brain waves, but I didn’t have any new or better understanding of my galloping, anxious brain, or what happens on those afternoons where I lose hours to online personality tests. Instead, I was just left with the vague sense that in some deep and essential way, I wasn’t performing as well as I could be.

I decided to seek out a second opinion from Gunkelman, whom several people had described to me as the go-to guy for interpreting EEGs. Gunkelman worked as an EEG tech in a hospital for decades, he told me. “In the early 1990s, I figured out that I had read 500,000 EEGs,” he said. “And then I stopped counting.” When he looked over my results, he grumbled about not having enough data to work with; for a proper brain map, he needed at least 10 minutes each with eyes open and closed, he said. But he nonetheless zipped through the EEG readout with the confidence of someone who’s done this more than half a million times before.

Like Kerson, Gunkelman zeroed in on my alpha. “When you close your eyes, you expect to see alpha in the back of the head, and we’re not really seeing that,” he said. That meant that my visual processing systems weren’t resting when my eyes were closed—the same inability to quiet down that Kerson had noticed. He also saw evidence of light drowsiness: “With an EEG, we can tell exactly how vigilant you are,” he said. He was right; I had been sleepy that day.

Then, perhaps to throw my drowsy, overactive brain a bone, Gunkelman noted some nice things about my alpha, too. “The alpha here is 11 or 12 hertz, a little faster than average,” he said, which generally correlated with better memory of facts and experiences. But if I wanted optimal functioning, he agreed with Kerson that some alpha training would help teach my brain to chill out so I could sleep better and be maximally alert during the day.

There had been something appealing to my anxious, over-alphaed brain about having yet another way to think of myself as an underperforming machine that could be tweaked and tuned up. But in the end, hearing Gunkelman describe my brain waves in such clinical terms had the opposite effect. I felt protective of all the ways my brain was still a mystery to me, and everything the brain map couldn’t show.

I’ve kept one of my brain-map images as my desktop background. I’m not sure why I feel attached to it; I couldn’t pick it out of a lineup of other brains, and I didn’t really learn anything new about myself from the experience—the map is not the territory, as they say. But even so, I still like looking at it: my speedy, drowsy, neurotypical, not-quite-optimal brain.

Read the original article Here

- Power Results in Less Mirroring and Reading Emotions

- By Jason von Stietz, M.A.

- June 27, 2017

Getty Images Are the rich and powerful out of touch? Why do leaders sometimes seem clueless about the needs of those they lead? Recent research found that those in power often stop using social skills, such as mirroring and “reading” people’s emotions, that were necessary for them to attain power. The findings were discussed in a recent article in The Atlantic:

When various lawmakers lit into John Stumpf at a congressional hearing last fall, each seemed to find a fresh way to flay the now-former CEO of Wells Fargo for failing to stop some 5,000 employees from setting up phony accounts for customers. But it was Stumpf’s performance that stood out. Here was a man who had risen to the top of the world’s most valuable bank, yet he seemed utterly unable to read a room. Although he apologized, he didn’t appear chastened or remorseful. Nor did he seem defiant or smug or even insincere. He looked disoriented, like a jet-lagged space traveler just arrived from Planet Stumpf, where deference to him is a natural law and 5,000 a commendably small number. Even the most direct barbs—“You have got to be kidding me” (Sean Duffy of Wisconsin); “I can’t believe some of what I’m hearing here” (Gregory Meeks of New York)—failed to shake him awake.

What was going through Stumpf’s head? New research suggests that the better question may be: What wasn’t going through it?

The historian Henry Adams was being metaphorical, not medical, when he described power as “a sort of tumor that ends by killing the victim’s sympathies.” But that’s not far from where Dacher Keltner, a psychology professor at UC Berkeley, ended up after years of lab and field experiments. Subjects under the influence of power, he found in studies spanning two decades, acted as if they had suffered a traumatic brain injury—becoming more impulsive, less risk-aware, and, crucially, less adept at seeing things from other people’s point of view.

Sukhvinder Obhi, a neuroscientist at McMaster University, in Ontario, recently described something similar. Unlike Keltner, who studies behaviors, Obhi studies brains. And when he put the heads of the powerful and the not-so-powerful under a transcranial-magnetic-stimulation machine, he found that power, in fact, impairs a specific neural process, “mirroring,” that may be a cornerstone of empathy. Which gives a neurological basis to what Keltner has termed the “power paradox”: Once we have power, we lose some of the capacities we needed to gain it in the first place.

That loss in capacity has been demonstrated in various creative ways. A 2006 study asked participants to draw the letter E on their forehead for others to view—a task that requires seeing yourself from an observer’s vantage point. Those feeling powerful were three times more likely to draw the E the right way to themselves—and backwards to everyone else (which calls to mind George W. Bush, who memorably held up the American flag backwards at the 2008 Olympics). Other experiments have shown that powerful people do worse at identifying what someone in a picture is feeling, or guessing how a colleague might interpret a remark.

The fact that people tend to mimic the expressions and body language of their superiors can aggravate this problem: Subordinates provide few reliable cues to the powerful. But more important, Keltner says, is the fact that the powerful stop mimicking others. Laughing when others laugh or tensing when others tense does more than ingratiate. It helps trigger the same feelings those others are experiencing and provides a window into where they are coming from. Powerful people “stop simulating the experience of others,” Keltner says, which leads to what he calls an “empathy deficit.”

Mirroring is a subtler kind of mimicry that goes on entirely within our heads, and without our awareness. When we watch someone perform an action, the part of the brain we would use to do that same thing lights up in sympathetic response. It might be best understood as vicarious experience. It’s what Obhi and his team were trying to activate when they had their subjects watch a video of someone’s hand squeezing a rubber ball.

For nonpowerful participants, mirroring worked fine: The neural pathways they would use to squeeze the ball themselves fired strongly. But the powerful group’s? Less so.

Was the mirroring response broken? More like anesthetized. None of the participants possessed permanent power. They were college students who had been “primed” to feel potent by recounting an experience in which they had been in charge. The anesthetic would presumably wear off when the feeling did—their brains weren’t structurally damaged after an afternoon in the lab. But if the effect had been long-lasting—say, by dint of having Wall Street analysts whispering their greatness quarter after quarter, board members offering them extra helpings of pay, and Forbes praising them for “doing well while doing good”—they may have what in medicine is known as “functional” changes to the brain.

I wondered whether the powerful might simply stop trying to put themselves in others’ shoes, without losing the ability to do so. As it happened, Obhi ran a subsequent study that may help answer that question. This time, subjects were told what mirroring was and asked to make a conscious effort to increase or decrease their response. “Our results,” he and his co-author, Katherine Naish, wrote, “showed no difference.” Effort didn’t help.

This is a depressing finding. Knowledge is supposed to be power. But what good is knowing that power deprives you of knowledge?

The sunniest possible spin, it seems, is that these changes are only sometimes harmful. Power, the research says, primes our brain to screen out peripheral information. In most situations, this provides a helpful efficiency boost. In social ones, it has the unfortunate side effect of making us more obtuse. Even that is not necessarily bad for the prospects of the powerful, or the groups they lead. As Susan Fiske, a Princeton psychology professor, has persuasively argued, power lessens the need for a nuanced read of people, since it gives us command of resources we once had to cajole from others. But of course, in a modern organization, the maintenance of that command relies on some level of organizational support. And the sheer number of examples of executive hubris that bristle from the headlines suggests that many leaders cross the line into counterproductive folly.

Less able to make out people’s individuating traits, they rely more heavily on stereotype. And the less they’re able to see, other research suggests, the more they rely on a personal “vision” for navigation. John Stumpf saw a Wells Fargo where every customer had eight separate accounts. (As he’d often noted to employees, eight rhymes with great.) “Cross-selling,” he told Congress, “is shorthand for deepening relationships.”

Is there nothing to be done?

No and yes. It’s difficult to stop power’s tendency to affect your brain. What’s easier—from time to time, at least—is to stop feeling powerful.

Insofar as it affects the way we think, power, Keltner reminded me, is not a post or a position but a mental state. Recount a time you did not feel powerful, his experiments suggest, and your brain can commune with reality.

Recalling an early experience of powerlessness seems to work for some people—and experiences that were searing enough may provide a sort of permanent protection. An incredible study published in The Journal of Finance last February found that CEOs who as children had lived through a natural disaster that produced significant fatalities were much less risk-seeking than CEOs who hadn’t. (The one problem, says Raghavendra Rau, a co-author of the study and a Cambridge University professor, is that CEOs who had lived through disasters without significant fatalities were more risk-seeking.)

But tornadoes, volcanoes, and tsunamis aren’t the only hubris-restraining forces out there. PepsiCo CEO and Chairman Indra Nooyi sometimes tells the story of the day she got the news of her appointment to the company’s board, in 2001. She arrived home percolating in her own sense of importance and vitality, when her mother asked whether, before she delivered her “great news,” she would go out and get some milk. Fuming, Nooyi went out and got it. “Leave that damn crown in the garage” was her mother’s advice when she returned.

The point of the story, really, is that Nooyi tells it. It serves as a useful reminder about ordinary obligation and the need to stay grounded. Nooyi’s mother, in the story, serves as a “toe holder,” a term once used by the political adviser Louis Howe to describe his relationship with the four-term President Franklin D. Roosevelt, whom Howe never stopped calling Franklin.

Read the original article Here


- More Evidence Aerobic Exercise Improves Brain Functioning

- By Jason von Stietz, M.A.

- June 12, 2017


Research suggesting that aerobic exercise improves mood and brain functioning continues to mount. One recent pilot study found that, in people with severe depression, 30 minutes of treadmill walking on 10 consecutive days produced a clinically relevant reduction in depression. This study was among many reviewed in a recent article in Science Alert:

"Aerobic exercise is the key for your head, just as it is for your heart," write the authors of a recent article in the Harvard Medical School blog, Mind and Mood.

While some of the benefits, like a lift in mood, can emerge as soon as a few minutes into a sweaty bike ride, others, like improved memory, might take several weeks to crop up.

Because some benefits take weeks to appear, the best type of fitness for your mind is any aerobic exercise that you can do regularly and consistently for at least 45 minutes at a time.

Depending on which benefits you're looking for, you might try adding a brisk walk or a jog to your daily routine. A pilot study in people with severe depression found that just 30 minutes of treadmill walking for 10 consecutive days was "sufficient to produce a clinically relevant and statistically significant reduction in depression."

Aerobic workouts can also help people who aren't suffering from clinical depression feel less stressed by helping to reduce levels of the body's natural stress hormones, such as adrenaline and cortisol, according to a recent study in the Journal of Physical Therapy Science.

If you're over 50, a study published last month in the British Journal of Sports Medicine suggests the best results come from combining aerobic and resistance exercise.

That could include anything from high-intensity interval training, like the 7-minute workout, to dynamic flow yoga, which intersperses strength-building poses like planks and push-ups with heart-pumping dance-like moves.

Another study, published on May 3, provides additional support for that research: in adults aged 60 to 88, walking for 30 minutes four days a week for 12 weeks appeared to strengthen connectivity in a brain region where weakened connections have been linked with memory loss.

Researchers still aren't sure why this type of exercise appears to provide a boost to the brain, but studies suggest it has to do with increased blood flow, which provides our minds with fresh energy and oxygen.

And one recent study in older women who displayed potential symptoms of dementia found that aerobic exercise was linked with an increase in the size of the hippocampus, a brain area involved in learning and memory.

Joe Northey, the lead author of the British study and an exercise scientist at the University of Canberra, says his research suggests that anyone in good health over age 50 should do 45 minutes to an hour of aerobic exercise "on as many days of the week as feasible".

Read the original article Here

